Why Holography Is 3D’s Next Big Technology (And it’s nearer than you think!)

Posted on Jan 4, 2011 by Alex Fice

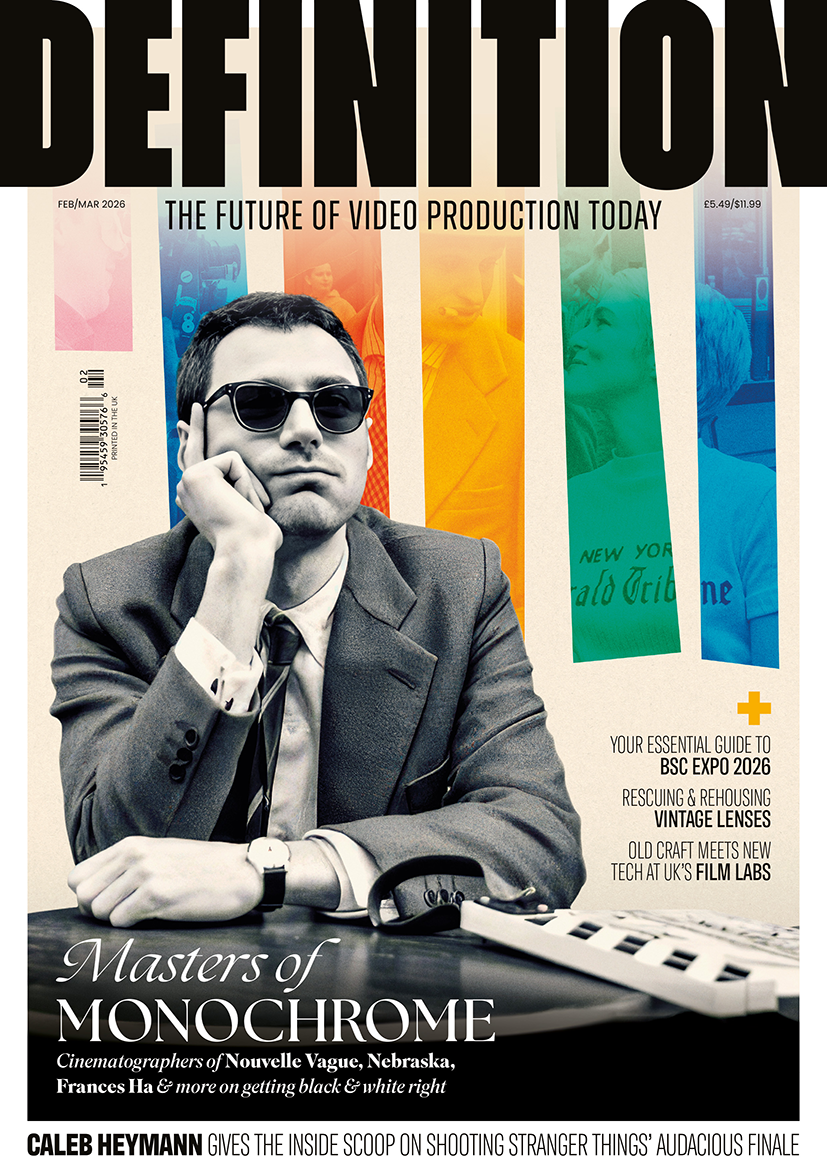

A typical set-up for Holographic 3D viewing – a new HDTV panel will be needed, carrying twin cameras and a refresh rate of around 480Hz to keep around four viewers happy

Stereoscopic 3D has now really picked up a head of steam, a momentum that can’t be stopped. But there are people who still believe Stereoscopic 3D is a gimmick. We’re not talking about film critics here, but about people whose view is based on fact, not fiction. Their objections are mostly aimed at the unrealistic way S3D deals with depth: depth percentages are either too small to deliver any significant dimension or, in short bursts, too big to provide a comfortable experience.

There is a new technology (albeit one that is sixty years old) called Holographic 3D that has been waiting in the wings as the next big thing, but it has been predicted to be at least eight or nine years away. Well, that depends on who you talk to.

SeeReal are a company whose base is in Luxembourg and they have been in 3D R&D since 2002. They used to operate in S3D until they decided it was a transient technology and turned their attention to holography in 2005.

Hagen Stolle from SeeReal: “If you talk to the 3D broadcasters they will tell you that you only have around 4% depth available. With holography you don’t have this limitation because you use the same information as you see in real life.”

DoP and steadicam operator Paul ‘Felix’ Forrest has spent a long time looking at this technology as it would impact on cinematography. He explains the basics.

“Having spent a considerable amount of time with H3D and its creators it has gradually dawned on me that with such an incredible display technology, the prospects are that we will have to ‘finesse’ our shooting styles to really make the most of it.

“One of the most dramatic changes H3D brings about is the functionality, which allows several users watching the same set to focus on different scene points – concurrently! The easiest way to imagine this is to think of the difference between viewing a film versus being at the theatre.

“The viewer having total control over what they actually focus on is one thing, but the subtlety of H3D is that when each viewer picks their point of focus in the scene, the rest of the scene naturally blurs, much the same as it does in the real world. Change your scene point and it is in focus while everything else is blurred. With this in mind, shooting the most practical and usable content to exploit H3D is likely to mean shooting at hyperfocal distance.

“Keeping in mind that H3D is totally compatible with 2D and more importantly with Stereoscopic 3D, there will always be traditionally shot content that will ‘adhere’ to our long established principles of cinematography. However, there will be an opportunity for a ‘new breed’ of content, which will force stronger narrative to ensure the ‘scene point of choice’ chosen by the viewer matches that visualized by the Director. Clearly, the utilisation of light, shadow and texture can be used to achieve similar effects without the need for shallow depth of field (as this is created naturally when scene points are viewed) and thus allow a full depth, in focus scene to be captured and eventually explored by the viewer.”

What about convergence?

Of course, one of the common questions that crops up is based upon the fact that you still shoot left and right eye content and therefore still need to ‘dial in’ convergence. However, key areas of difference exist in respect of H3D-specific content.

Whilst it is possible to shoot in the same fashion as adopted for Stereoscopic 3D (with shifting points of convergence), with H3D, it is also possible to shoot with a fixed point of convergence. Whilst it may be regarded as ‘good’ practice to continue to ‘pull convergence’ based upon where the action is (after all, the aim may be to make the content compatible with Stereo and Holo displays), early tests with H3D suggest that this may not be the best way to ‘optimise’ the scene.

Remembering that Holography affords the user a free choice for the point of focus, it becomes key to ensure that ‘scene scale’ is accurate. Parallax can always be adjusted with an H3D display as long as the original scale proportions are maintained. Remembering also that parallax is the strongest depth cue, you can see that convergence still has a major role to play in capturing usable images. So we can shoot with fixed convergence: as long as we have, and maintain, the original scale proportions, we can adjust parallax to ensure a comfortable viewing experience for all scene points.

So, if you can shoot with fixed convergence, where do you converge? Well this is the point at which another key difference is introduced. If you imagine the H3D display as a ‘window’ onto your 3D scene, it is likely much of the action will occur behind the screen but with real depth (just like looking out of a window in the real world!). Therefore the most appropriate point for convergence is on the display plane (or zero/neutral parallax). Once again, ‘scene scale’ is critically important: knowing the total depth of a scene and which part of this scene will be behind the display will help set convergence for that scene. Once set for that scene, it shouldn’t be necessary to adjust it again, even when zooming in/out (as long as the original scale proportions are maintained, parallax can be adjusted).

The clue to why this is less critical is that convergence in Stereoscopic 3D is used to indicate a ‘level of depth’. With H3D, the level of depth is ‘chosen’ by the viewer as they naturally select a scene point in the scene on which to focus. The eyes, then, very naturally converge and focus on the same point (hence no headaches and no depth cue mismatch). This doesn’t mean it isn’t possible to create parallax errors (or painful divergence!) but the discipline here is to ensure that the maximum distance of a scene is always identified and in playback that maximum parallax means that at infinity, your eyes are parallel. This additional information needs to be captured at the point of acquisition for the content (whilst complex algorithms can make a good job of recreating it, the workload could be considerable).
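The ‘eyes parallel at infinity’ rule can be checked with ordinary stereoscopic viewing geometry. The sketch below is only an illustration, not SeeReal’s method: it assumes a viewer sitting centrally at a given distance from the display, and the function names and the 65mm eye separation are assumptions made for the example. For a point meant to appear at distance Z from a viewer sitting at distance D, similar triangles give an on-screen parallax of e(Z - D)/Z, where e is the eye separation.

```python
import math


def screen_parallax(eye_sep_m: float, view_dist_m: float, point_dist_m: float) -> float:
    """On-screen horizontal parallax in metres for a single scene point.

    eye_sep_m    -- distance between the viewer's eyes (assumed value)
    view_dist_m  -- distance from the viewer to the display plane
    point_dist_m -- intended distance from the viewer to the scene point

    Zero means the point sits on the display plane, positive values put it
    behind the display and negative values bring it in front.
    """
    if point_dist_m <= 0:
        raise ValueError("point_dist_m must be positive")
    if math.isinf(point_dist_m):
        # Lines of sight are parallel, so parallax equals the eye separation.
        return eye_sep_m
    return eye_sep_m * (point_dist_m - view_dist_m) / point_dist_m


def causes_divergence(parallax_m: float, eye_sep_m: float) -> bool:
    """True if the eyes would have to turn outwards to fuse this parallax."""
    return parallax_m > eye_sep_m


if __name__ == "__main__":
    eye_sep = 0.065  # assumed adult eye separation in metres
    for z in (1.0, 2.0, 4.0, math.inf):
        p = screen_parallax(eye_sep, view_dist_m=2.0, point_dist_m=z)
        print(f"point at {z} m -> parallax {p * 1000:.1f} mm")
```

The parallax never exceeds the eye separation however distant the point is, which is why knowing the maximum distance in the scene is enough to keep playback clear of divergence.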

So how is it done?

In essence, the most basic way of achieving this (the variable depth in the scene) is through the use of a Depth Map. In many high end CAD applications and other 3D modeling software, Depth Maps have been used for some time. They are essentially grayscale images which contain the ‘z’ axis data for distance from the focal plane to an object.

With these high end CAD packages, the Depth Map is often 32-bit. The good news is that the level of ‘resolution’ required for H3D is lower, and therefore an 8-bit Depth Map is likely to provide the necessary level of granularity to reconstruct the scene with accurate depth. Many high end rigs are capable of capturing such information, although it is yet to be seen whether or not this includes the Depth Map in the format required. As already described, one of the most important factors in H3D is the ‘scene scale’, and this is what the Depth Map represents. The main use of the Depth Map is for rendering the ‘reconstructions’ during display.
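To get a feel for what 8 bits of depth buys you, here is a minimal sketch of a grayscale depth encoding. It assumes a simple linear mapping between a nearest and a farthest scene distance. The article does not say which encoding H3D actually uses, so the mapping, the function names and the example distances are all assumptions.

```python
def encode_depth(depth_m: float, near_m: float, far_m: float, bits: int = 8) -> int:
    """Map a distance to a grayscale code (0 = nearest, max code = farthest).

    Assumes linear quantisation between near_m and far_m; distances outside
    that range are clamped to it.
    """
    if far_m <= near_m:
        raise ValueError("far_m must be greater than near_m")
    levels = (1 << bits) - 1  # 255 for an 8-bit map
    clamped = min(max(depth_m, near_m), far_m)
    return round((clamped - near_m) / (far_m - near_m) * levels)


def decode_depth(code: int, near_m: float, far_m: float, bits: int = 8) -> float:
    """Recover the distance represented by a grayscale code."""
    levels = (1 << bits) - 1
    return near_m + code / levels * (far_m - near_m)


def depth_step(near_m: float, far_m: float, bits: int = 8) -> float:
    """Distance between two adjacent grayscale codes."""
    return (far_m - near_m) / ((1 << bits) - 1)


if __name__ == "__main__":
    near, far = 1.0, 11.0  # hypothetical scene spanning 10 metres of depth
    code = encode_depth(3.0, near, far)
    print(code, decode_depth(code, near, far), depth_step(near, far))
```

Under these assumptions a scene with 10 metres of depth is resolved in steps of roughly 4cm, and the round trip through the 8-bit code is never off by more than half a step. Note that the near and far distances have to travel with the map, which is one reason the scene scale needs to be recorded at acquisition.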

Some commercial organisations have already developed algorithms to recreate the Depth Map from Stereoscopic 3D shoots to enable full depth budget 3D content. These are likely to deliver the Depth Map necessary to experience 1:1 3D reconstruction to be shown on H3D systems.

Although the Depth Map matters most for display purposes, as discussed earlier, it is key, where possible, to identify this metadata at the point of acquisition so that the most can be made of it when scaling the ‘reconstructions’ for different size displays.

With this in mind, it is likely to be the case that the data overhead for transmission should be relatively low. As an example, it can be envisaged that Stereoscopic 3D content may be broadcast with the Depth Map as metadata alongside it. For viewers with a Stereoscopic 3D set, it will display in its normal fashion with a limited depth budget. For viewers with an H3D set, it will display perfect Holographic 3D reconstructions.

At least one of the broadcasters of 3D is open to preparing content immediately with that full Depth Map available. No names mentioned as yet!

So you can shoot at infinity, because you, the viewer, will choose where the scene point is focused, not the stereographer or the director. Think of that theatre analogy: at the theatre we can all choose to look at different things on the stage.

Stereoscopic 3D compatible

H3D can show stereo content without a problem. The exciting thing is that if you have access to the metadata on the camera rig then you can re-compute your raw data and re-generate the real depth. With that very same data you can perhaps take a football match that was recorded in stereo with a depth of plus or minus a few inches and, after re-computing it on-the-fly, have hundreds of metres of depth.

Ultimately with H3D you may not need a stereographer, because at the moment a stereographer sets the convergence point and tries to steer the viewer towards what production has decided is the main focal point of the scene.

It will be more important to retain the data from the lens and the interocular distance captured for everything you’re shooting in 3D. Your compositional style will also change and it will be more like looking through a window, or from a box in a theatre.

In the wider media, people like James Cameron say that the earliest holographic solutions are at least eight years away. Maybe he was quoting the University of Arizona, who recently said the same (seven to ten years), and maybe he is protecting his data, which is already patent protected. Japan generously promised full holographic playback of football games if they won the right to host the 2022 World Cup. Alas, there was no such promise from winners Qatar.

SeeReal’s Holographic 3D Solution

To achieve quality 3D real-time holography – realistic and comfortable to view – the images transmitted to the eye must be reduced to the essentials. Wasted information is also wasted image processing and this slows down replication and spoils image quality.

So the breakthrough technology developed by SeeReal banks on highly logical yet keep-it-simple solutions.

First of all, rather than boosting resolutions and intensifying display needs to unbearable levels, SeeReal has introduced Viewing Window technology. This actually limits display needs and corresponding diffraction angles.

The benefit: a much larger display pitch, so for a 40 inch display the pixel size is in the range of 25 – 50 microns. This is well within today’s state-of-the-art.

Another benefit is smaller encoded hologram areas per scene point, with the shape and size of the sub-hologram closely linked to the 3D scene being viewed (sub-holograms are freely superimposed to accurately represent any 3D scene point distribution).
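The link between pixel pitch and diffraction angle can be illustrated with the textbook grating relations. This is a rough sketch, not SeeReal’s own calculation: it assumes green light at 532nm, a viewing distance of 2 metres and the small-angle approximation, and the function names are made up for the example. One diffraction order at distance d spans roughly λd/p for pixel pitch p, while a conventional hologram that must throw light out to a half-angle θ needs a pitch of λ/(2 sin θ).

```python
import math


def viewing_window_mm(pitch_um: float, wavelength_nm: float, view_dist_m: float) -> float:
    """Approximate width of one diffraction order at the viewer, in millimetres.

    Uses the small-angle grating relation w = wavelength * distance / pitch.
    """
    pitch_m = pitch_um * 1e-6
    wavelength_m = wavelength_nm * 1e-9
    return wavelength_m * view_dist_m / pitch_m * 1e3


def pitch_for_half_angle_um(wavelength_nm: float, half_angle_deg: float) -> float:
    """Pixel pitch in microns needed to diffract light out to +/- half_angle_deg.

    Based on sin(theta) = wavelength / (2 * pitch), i.e. two pixels per
    fringe period at the steepest angle.
    """
    wavelength_um = wavelength_nm * 1e-3
    return wavelength_um / (2.0 * math.sin(math.radians(half_angle_deg)))


if __name__ == "__main__":
    for pitch in (25.0, 50.0):
        w = viewing_window_mm(pitch, wavelength_nm=532.0, view_dist_m=2.0)
        print(f"{pitch:.0f} micron pitch -> window about {w:.1f} mm wide")
    p = pitch_for_half_angle_um(532.0, half_angle_deg=30.0)
    print(f"+/-30 degree viewing zone -> pitch about {p:.2f} microns")
```

With these assumed numbers, a 25 to 50 micron pitch gives a window of roughly 43mm down to 21mm at the viewer, big enough to cover an eye pupil, whereas a conventional wide-angle hologram would need pixels of about half a micron. That gap is the saving the larger pitch represents, and it is also why the window has to be steered to wherever the viewer’s eyes are.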

Overall, the combination of these two basic principles means that it only takes today’s computing capabilities to create full parallax colour 3D HDTV images in real-time. For example, a 40 inch holographic TV would consume only approx three TFLOPS.

Producing these viewing windows then limits the amount of computing power needed to see Holographic 3D. But what happens when there is more than one viewer? This is dealt with by faster displays and these are slowly coming to market. The information is multiplexed in real time and then doubled and tripled for each viewer.

For a single user you will need a 120Hz display, which is the standard now, and for four users you need a 480Hz display.
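The arithmetic behind those figures is simple time-multiplexing. The sketch below assumes the cost scales linearly, with every viewer taking a 120Hz share of the panel, as the numbers quoted here suggest. The function names are illustrative.

```python
def required_refresh_hz(viewers: int, per_viewer_hz: int = 120) -> int:
    """Panel refresh rate needed if each viewer gets their own time slices."""
    if viewers < 1:
        raise ValueError("need at least one viewer")
    return viewers * per_viewer_hz


def max_viewers(panel_hz: int, per_viewer_hz: int = 120) -> int:
    """Number of viewers a panel can serve at the assumed per-viewer rate."""
    return panel_hz // per_viewer_hz


if __name__ == "__main__":
    for n in (1, 2, 4):
        print(f"{n} viewer(s) -> {required_refresh_hz(n)} Hz panel")
    print(f"240 Hz panel -> {max_viewers(240)} viewers")
```

On that basis a 240Hz panel would cover the one or two viewers of a PC monitor, and a 480Hz panel the four viewers of a living room set.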

SeeReal’s initial designs with manufacturers in Asia will be able to serve four to five viewers in the first generation of panels. Of course for PC monitors you never need more than one or two so the gaming world will be one of the first industries to benefit from Holographic 3D.

So the key here is the eye tracking technology that SeeReal already had, which allows less computing power to serve up full resolution holography to four or five viewers. A typical Hologram is about 3-4mm across.