The new digital puppet masters

Posted on Jul 29, 2019 by Julian Mitchell

Game engines are disrupting the media industry – but really, platforms like Unity are just another tool set

Words Nicki Mills / Pictures Unity

The process of making a film runs from sketching out ideas with storyboards and timing them in an editing program right through to the very end, when pixels are on the screen in a movie theatre. People are using Unity in myriad ways within that spectrum, and for content like episodic animation it can be used from start to finish. Adam Myhill, Head of Cinematics at Unity, highlights a recent animation series. “The Baymax Dreams project that we did for Disney, which features Big Hero Six, the big puffy robot, we did that start to finish within Unity and it was all about real-time animation. But not everybody is going to do that. For Blade Runner, The Jungle Book and Ready Player One, for instance, Unity featured in a much more virtual production environment.”

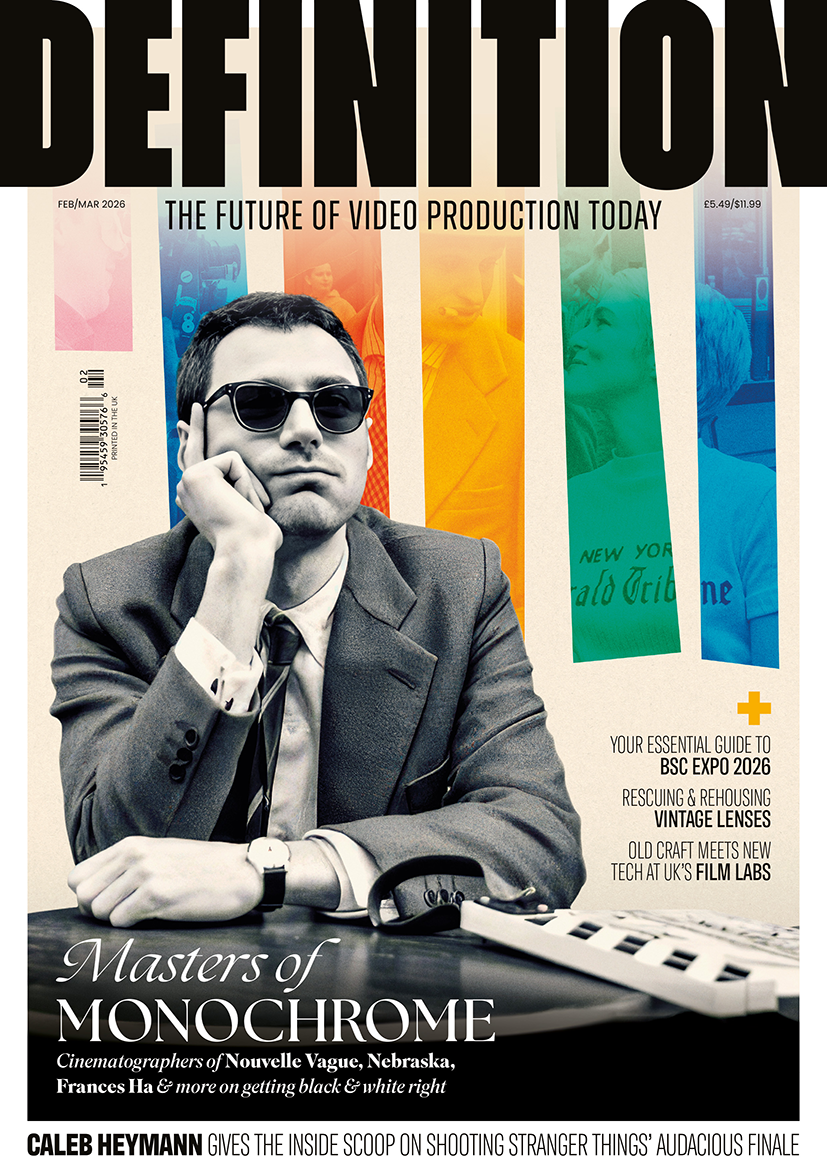

Adam Myhill, Head of Cinematics at Unity, believes the system makes for better filmmaking


Sharing environments

In these movies, the director and multiple people were sharing an environment. “For instance, if you’re on a laptop you can see everything and the director’s wearing a VIVE VR headset, and someone else is on another machine. You can puppeteer experiences live, so someone’s animating the gazelles running to the left and the director says, ‘Can we move the shadows on the left a bit more because we want the shadows to rack across the front of the camera, and also why don’t we move the mountains a bit as well?’

“What we’re finding is that directors are feeling they have never, in CG, been so close to their characters because in a pure CG world each shot is done by a different layout person, the animation is done differently from the lighting, and you don’t actually see everything until the end when it gets into compositing.

“So we bring all that forward, which allows you to have a finger in every department. If you want to edit, move a light, change a lens and do colour grading, you can do it all at the same time because they’re all right there, as it’s all real time and it’s all in one program.”

Unity’s short film Sonder combines 2D animation with the richness of 3D environments


Massive change

The impact of this kind of change in production is huge and will affect the whole industry. “This is a media revolution. I might be biased, but it’s true. It will have the same impact as the move from film to digital cinematography, in terms of changing what happens on set and changing the equipment. Here’s an example: you’re doing a VFX shot on a film, let’s say you’re comping in a dinosaur. The traditional way of doing this is that the director and the DOP and everyone is on set, and they’re saying, ‘the dinosaur is going there’, and they close their eyes and visualise what it will look like. Then a VFX supervisor will measure where the camera is and everything else in the shot, and they will go back to the CG house who will look at the photos and the measurements, and will build what they need to build and then make the dinosaur and comp it in, probably cleaning up some stuff to make it look as real as possible.


“The director is then seeing it maybe three weeks later from the initial shot – and realises that the dinosaur should have come in from the left and not the right. What we do is allow the dinosaur to be there in the set, so you’re there with an iPad – you see the dinosaur on the set, literally where you want it. You can then change exactly what you want.

“What the CG houses are saying, and this is a quote from DigitalFilm Tree in LA: ‘More money is hitting the screen because now, when we build something, we know that it’s exactly what they want because it was already tried out on set’. They’re very happy, as the time is shorter but the quality is higher. These are tools that people shouldn’t be afraid of because once they try them out they’ll see that they are so transformative.”

Real time

The work that Unity did with The Jungle Book was based on proxies with basic shading, but it was still great to see everything. “What we’re seeing now with every new version of Unity is the increased quality of the graphics, including recently real-time ray tracing. So the quality of the real-time representation of these models is getting better and better.”

Unity are at present talking and working with all the main content producers, including companies like Netflix who are on course to embrace the virtual production world – but it’s not all about saving money. “We initially thought that saving money was going to be the huge thing, and it is for some smaller studios, but for the larger studios it’s not all about saving money. They want the savings to allow for more time developing stories so the content is better; they feel that they can get closer to the characters and experiment. You can see if jokes are working and the timing is right; you have more choices – ‘what if we shot this with an overhead or changed the edit on that?’

“Neill Blomkamp on Adam 2 quite famously changed the lighting on one of the shots on the last day from a noon sun to a four-o’clock-in-the-afternoon sun. It was about 2:30pm when it had to be done, which in traditional CG is beyond laughable but it was done on time on the day.”