Shooting And Editing Life Of Pi

Posted on Feb 19, 2013 by Alex Fice

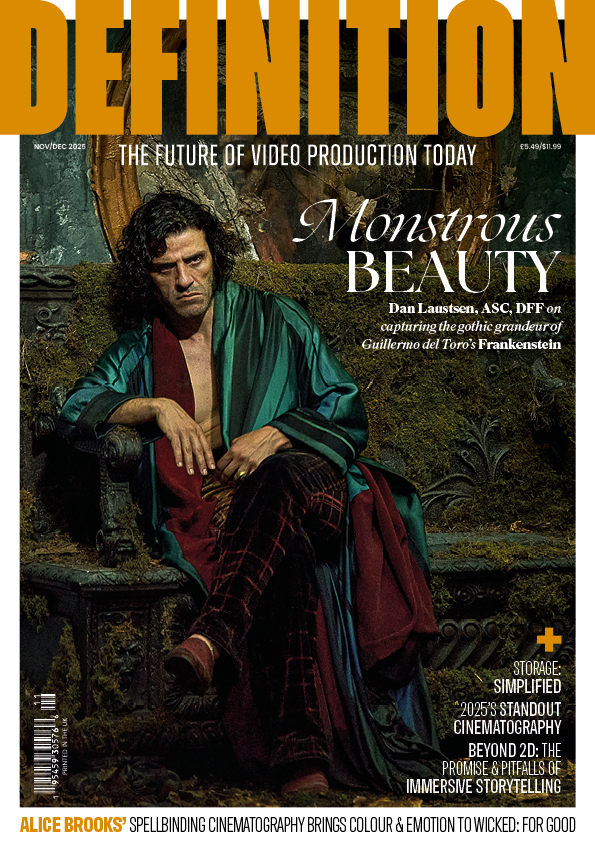

Pi in the tank with a CGI Richard Parker.

Capturing The Golden Light – DoP Claudio Miranda

The production shot with six Alexa cameras paired on three Cameron Pace Fusion rigs with ARRI / Zeiss Master Primes. The uncompressed HD data was recorded to Codex recorders. The result is a remarkable cinematic accomplishment that enhances the viewing experience and immerses audiences in a sea of breathtaking imagery. Here DP Claudio Miranda talks about materialising images and emotions on a grand scale for Life Of Pi.

“Life Of Pi is naturalistic and appropriate for the time that we were trying to shoot. The look has a kind of a golden hour, magical feel, which reflects on the story itself. There’s a great, soft feeling to it. It wants to draw you in. At times there are more realistic environments. You feel like you are taking this journey with the main character, Pi. You feel like you are with a boy and a tiger.

“I did some early tests with other cameras. We needed strong, controlled highlights. Normally, sunlight reflecting on water is a pretty big digital issue. We shot off the Venice Beach pier with the camera very low to the water. The Alexa was the only camera that didn’t feel electronic in the highlights. That’s pretty critical to the story, with all of the highlights going out of control in the reflections and with characters really close to the water. This was really important to get a handle on. It was a landslide why we chose Alexa. It was obvious very early on that was our camera.

“I’m a really big fan of low light and being able to get as much light out of practicals as possible. The low light sensitivity of these cameras is pretty amazing. We wanted to light a whole pool and the art department brought in about 120,000 candles for this night scene. It was shot mostly with available lighting – not completely, but it could have been. We had a fraction of lighting in the background, in the trees to give a little depth. It was low light and these cameras are so sensitive nowadays, you can capture this kind of scene with just candles. We shot everything at 800 ASA. The candlelit scene is probably my favourite in the whole film. To be able to retain those highlights and light people’s faces, that’s a pretty impressive camera to be able to control that. It was a really fantastic scene to have everyone pitch in and create this magical event in India. It was stunning.”

Building The 3D Edit – Editor Tim Squyres

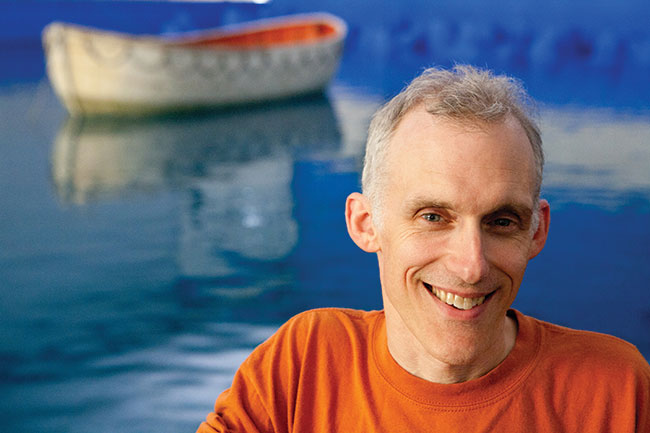

Life Of Pi Editor Tim Squyres with the wave tank behind

“We shot in Taiwan and in India and we had a digital lab that went with production. We had the lab set up in Taiwan at an abandoned airport where we were shooting, mostly in the terminal building. Also that’s where we built our wave tank and we were 50 steps away from it. I travelled back and forth, part of my time in Taiwan and part in New York, probably a little more in New York.

“The footage would go to the digital lab, go through the stereo correction and then come to us as side-by-side media. We also had each eye discrete. In the AVID we stored the images at DNxHD 115, which is a pretty high resolution, low compression format. We did that because we wanted to minimise compression artifacts. In 3D, if the compression artifacts are slightly different in the two eyes, that gets really irritating to look at, so we dealt with that by minimising it.

“So for every frame that we got, we got three frames: left eye, right eye and side by side, which took up a lot of storage. We cut in AVID Media Composer 5, even though MC 6 has fantastic 3D support. We were beta testing 6 while we were prepping and for most of the shoot, but it didn’t come out early enough for us to use it.
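As a rough illustration of why three streams per frame add up, here is a back-of-the-envelope sketch. It assumes the nominal video bitrate of about 115 Mb/s that the DNxHD 115 name refers to, assumes all three streams are stored at that same rate, and ignores audio and container overhead. The function name and figures are illustrative only, not numbers from the production.

```python
def storage_gb_per_hour(bitrate_mbps: float, streams: int = 1) -> float:
    """Approximate storage, in decimal gigabytes, for one hour of footage.

    bitrate_mbps is the nominal video bitrate in megabits per second.
    Audio and container overhead are ignored (assumption).
    """
    bits_per_hour = bitrate_mbps * 1_000_000 * 3600
    bytes_per_hour = bits_per_hour / 8
    return streams * bytes_per_hour / 1_000_000_000


# Assumption: nominal DNxHD 115 bitrate, identical for every stream.
NOMINAL_DNXHD_115_MBPS = 115

per_stream = storage_gb_per_hour(NOMINAL_DNXHD_115_MBPS)
# Left eye, right eye and side-by-side, as described in the interview.
all_three = storage_gb_per_hour(NOMINAL_DNXHD_115_MBPS, streams=3)

print(f"One stream:    {per_stream:.2f} GB per hour")
print(f"Three streams: {all_three:.2f} GB per hour")
```

Under those assumptions, each hour of dailies needs roughly 52 GB per stream, or around 155 GB once the left eye, right eye and side-by-side versions are all kept.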

“We worked entirely in 3D. Neither Ang nor I had ever worked with 3D before. We didn’t want to be cutting in 2D and imagining what it looked like in 3D. Right from the first day of dailies I worked in 3D and didn’t actually see the movie in 2D until about two months ago.

“MC 6 has a different way of setting up the clips so once you start in 5 you can’t really move to 6 as you would have to rebuild everything. In order to re-converge shots we would have to duplicate them, crop out the left eye, crop the two eyes separately and then re-position one of the eyes in order to re-converge. It was a cumbersome way to do it.
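The general idea behind re-converging a stereo pair, sliding one eye sideways relative to the other and cropping both to the area they still share, can be sketched in a few lines. This is a generic illustration of horizontal image translation, not the Media Composer workflow Squyres describes. The function name, the list-of-rows image format and the sign convention (a positive shift pushes the scene back) are all assumptions made for the example.

```python
def reconverge(left, right, shift):
    """Re-converge a stereo pair by horizontal image translation.

    left, right: images as lists of rows, each row a list of pixel values.
    shift: pixels to slide the right eye to the right relative to the left eye.
           Every feature's parallax (x_right - x_left) changes by `shift`.
    Returns both eyes cropped to the columns they still have in common.
    """
    width = len(left[0])
    if abs(shift) >= width:
        raise ValueError("shift must be smaller than the image width")
    if shift >= 0:
        # Drop the left edge of the left eye and the right edge of the right eye.
        new_left = [row[shift:] for row in left]
        new_right = [row[:width - shift] for row in right]
    else:
        # Mirror case: drop the right edge of the left eye, left edge of the right.
        new_left = [row[:width + shift] for row in left]
        new_right = [row[-shift:] for row in right]
    return new_left, new_right


# One-row test images with a marker (1) at the same column in both eyes,
# so the marker starts at zero parallax (on the screen plane).
left_eye = [[0, 0, 0, 1, 0, 0, 0, 0]]
right_eye = [[0, 0, 0, 1, 0, 0, 0, 0]]

new_left, new_right = reconverge(left_eye, right_eye, 2)
parallax = new_right[0].index(1) - new_left[0].index(1)
print("Cropped width:", len(new_left[0]))
print("Marker parallax after shift:", parallax)
```

The cost of the technique is visible in the cropped width: every pixel of shift is a column lost from both eyes, so the result has to be scaled or reframed afterwards.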

“It’s interesting though, when you get into 3D you discover all kinds of interesting things, especially in things like dissolves. You may have a dissolve that would look perfectly fine in 2D but in 3D you realise that this doesn’t work. Sometimes you would have to push the outgoing shot back so the film element in that doesn’t wind up in front of an element in the incoming shot. Logically and aesthetically it would be better behind. Sometimes you have to move things around quite a bit and you only discover that by doing it. That’s why we really wanted to work in 3D all the time to get a sense of what we were doing.

“We set up a dailies screening room in Taiwan with a Christie 3D projector and when we were done shooting we took that projector back to New York into my edit room. In our cutting room we had an 11.5-foot screen and a big sound system and it was actually a very good small screening room.

“There was really no difference between the two cutting rooms in Taiwan and New York. I had two assistants in Taiwan the entire time and I had two assistants and a VFX editor in New York and I went back and forth. The way we had the projects set up was that we had three completely separate ‘sandboxed’ parts of the project. There was an area that the guys in Taiwan did, dealing with dailies, and they would hand them to my assistant in New York and he would hand them to me. We were very careful how we transferred things from one section to another. You just have to be very careful with your workflow. In that way it really didn’t matter where I was, I could work just as well in either place. The cutting room in New York was much better but in Taiwan I was near the set.

“There were a number of things in the film where my presence was needed on-set. For example there was a scene where I had to work with the tiger trainer for a couple of days, putting half of the scene together. Once we had that with the real tigers, we had to work out how the scene was going to work. A few weeks later we shot the part with the actor after we’d seen what the real tigers gave us. When we shot the part with the actor I was sitting in the boat narrating the scene for the actor and the animation supervisor, who was swatting the stick and pretending to be the tiger.

“So for a lot of things like that it was very helpful to have me on-set. But in Taiwan I was getting called out of the editing room all the time. In New York, they were asleep in Taiwan while I was busy cutting.

“On this film it’s very difficult to shoot 3D and it’s really difficult to shoot on water. Water films are notorious for going way over schedule but we didn’t. It’s also difficult to shoot with animals and it’s difficult to shoot with kids and we were doing all of them in the same movie!

“What’s great about having the on-set lab is that if you need things rushed you can do it. Your lab essentially is working only for you and not juggling you with other jobs. If we needed to change things, if we needed to get stuff re-graded it was all relatively easy because the guys were right there. Another great thing we did when I really needed to turn things around fast was to import the QuickTimes from the video assist and cut them in. Obviously that was 2D but there were some instances where they had got a scene but were not sure whether they needed to go back to this set-up again tomorrow or if they could move on. I could get the video assist and cut it right away and give them an immediate answer.

“When we shot in Montreal for a week I was on-set all the time and I had the AVID networked with the video assist drive so I could get those ProRes files as soon as they called CUT and import them immediately. So I was cutting as they were shooting. What was really good about that was that I was cutting pieces that were going into existing footage, so by dropping them in people could see if we’d got it and then move on. For doing complete scenes it’s not really that useful. But for doing bits and pieces and pick-ups it’s fantastic. In Media Composer 6 you can import ProRes and it’s very fast.

“Editing with glasses was a new experience for me and Ang. We decided to go for passive glasses mostly because there are no batteries in them. Active glasses are a little bit bigger and heavier and if you’re wearing them 10 hours a day comfort is more important than anything. But I went home with a lot of headaches, especially early on. When we were shooting our scenes with Pi and the tiger in the boat in the ocean, it was the actor with no tiger and no ocean, so right away we in editorial started putting in tiger and backgrounds. Usually I would do my first pass on a scene without a tiger, just imagining it. We had a bunch of tiger animation from the PreVis given to us on green so we could edit it into our shots and track it ourselves. So I would have tiger in the shots almost immediately and we would put backgrounds in also. So by the time we screened the assembly all the tigers, oceans and skies were in. They weren’t good but it was enough to tell the story. But 3D has a lot of problems with this so that didn’t help with the headaches either.”