Why Stereoscopic Editing Is Virtually Non-Existent

Posted on May 31, 2010 by Alex Fice

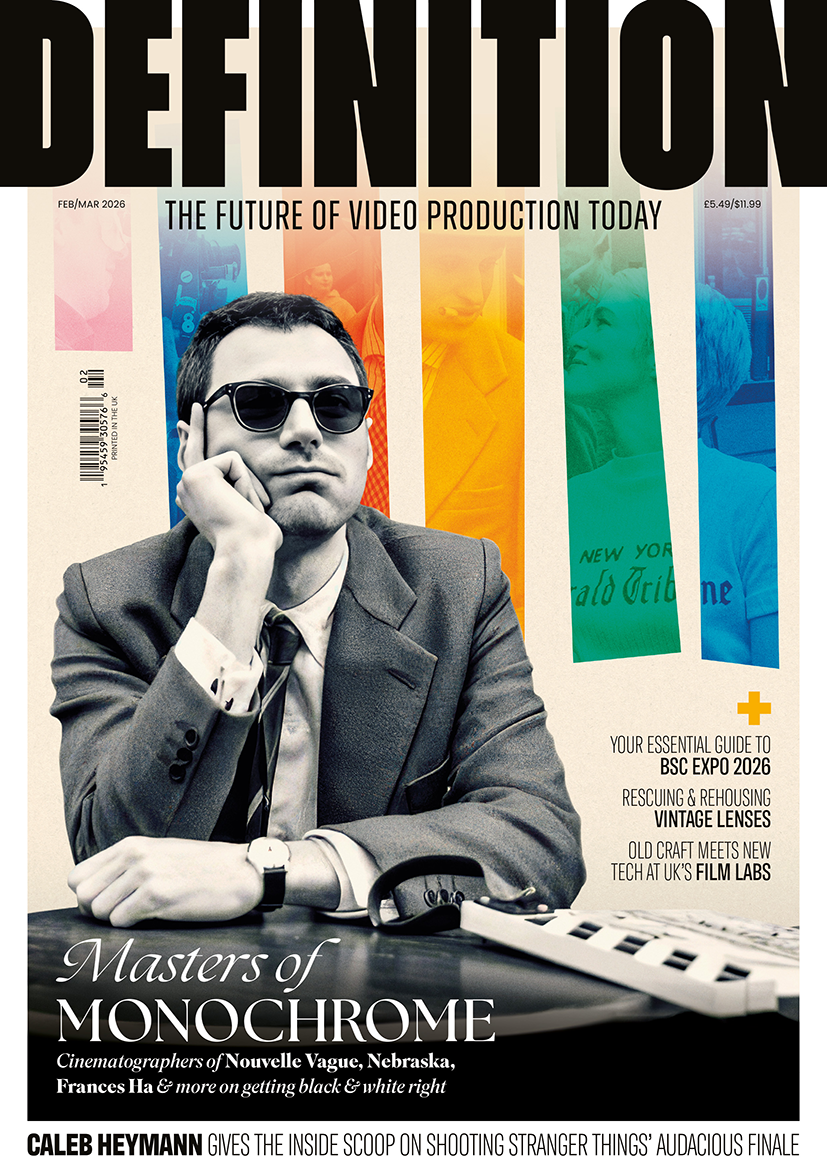

A Final Cut Pro window running the NEO3D plug-in

Offline editing in 3D was always possible on non-linear editing systems; at its most basic level you’re just asking your system to play two simultaneous streams of video. However, most of the editing manufacturers have been waiting for 3D to attain a critical mass before producing a whole ‘end to end’ solution from ingest to play-out, one that can import data from rigs, allow alignment and other correction, and carry post on to the grade, passing on all the metadata as it goes. Cineform’s plug-in solution, Neo3D, is perhaps the only bespoke editing solution on the market at the moment, although this time next year it will be joined by many others.

You could argue that 3D could have remained in the domain of the blockbuster animation movie, with the occasional Avatar thrown in for good measure, but broadcasters are now lining up to provide their own content channels and, in the case of Discovery, their own products – they are currently helping Sony produce a 3D camera solution similar to the Panasonic 3DA1 camcorder shown at NAB.

Now that the broadcasters are headed in a similar direction, editing companies have to start putting 3D features high up on their ‘to do’ feature lists.

Lawrence Windley from AVID argues that AVID systems have been able to handle 3D for a while: “What we are seeing now are Sky, ESPN, Canal+ and others all launching 3D channels and wanting more of a finishing-for-television type of 3D workflow solution. We also need to support the new cameras from Sony and Panasonic that are coming out this summer. What everyone says to us is that they don’t want to go back to an offline/online workflow; you don’t really want to conform in a Pablo or Mistika, those kinds of systems. There are some 3D finishing systems out there that you can’t edit on but you can grade on, so it’s now a question of joining everything up with the offline or doing everything in full res.

“A lot of the enablers of what we need to do are in version 5, like the native support of the RED camera format. The important thing is that you are editing in context so in the GUI you are able to trim stuff, do dissolves and then see whether it works or not in stereo. That all assumes that the two images are correctly registered.

“In the market what’s happening now is that the actual requirements are getting locked down, in the sense that Sky will be transmitting side-by-side, it’s going to be on an HD screen and in the living room. From a manufacturer’s point of view, as in ‘what we need to support in the software’, you then know it’s going to be shot like this and end up there, so how much do we need to do? You can get into the detailed physics of it, which probably isn’t relevant to bread and butter broadcast stuff. So it gets a lot easier to then say let’s build some tools in a workflow that support ‘this’. A lot of the work done on the rigs, creating the footage in the right way, probably means the correctional tools you need now are less extreme than they were before.
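For readers unfamiliar with the side-by-side format Windley mentions: each eye’s picture is squeezed to half its width and the two halves sit next to each other in one ordinary HD frame, which the display then splits and stretches again. The following is a minimal sketch of that packing, assuming frames are plain lists of rows of greyscale values with an even width. The function names (`squeeze_half`, `pack_side_by_side`, `unpack_eye`) are illustrative only and do not come from AVID, Sky or any broadcast toolset.

```python
def squeeze_half(frame):
    """Halve the width of a frame by averaging each adjacent pair of pixels."""
    return [
        [(row[x] + row[x + 1]) / 2 for x in range(0, len(row) - 1, 2)]
        for row in frame
    ]


def pack_side_by_side(left, right):
    """Pack two equal-sized eye views into one frame of the original width."""
    same_height = len(left) == len(right)
    same_widths = all(len(a) == len(b) for a, b in zip(left, right))
    if not (same_height and same_widths):
        raise ValueError("left and right frames must have the same dimensions")
    # Left eye occupies the left half of each row, right eye the right half.
    return [
        l_row + r_row
        for l_row, r_row in zip(squeeze_half(left), squeeze_half(right))
    ]


def unpack_eye(packed, eye="left"):
    """Return the half-width picture for one eye from a packed frame."""
    half = len(packed[0]) // 2
    return [row[:half] if eye == "left" else row[half:] for row in packed]


# Tiny 4x2 "frames" standing in for the two camera views.
left = [[0, 2, 4, 6], [8, 10, 12, 14]]
right = [[100, 102, 104, 106], [108, 110, 112, 114]]
packed = pack_side_by_side(left, right)
right_half = unpack_eye(packed, "right")
```

The packed frame is the same size as a single 2D frame, which is why it can travel through an existing HD chain; the cost is that each eye keeps only half its horizontal resolution.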

“The broadcast TV channel requirements are very different from those of, say, an ‘animation film company’, because you start to think about how you make promos where we might be inter-cutting lots of different footage. How do we caption it? What are the conventions on that? You need different tools like easing cuts in and out, changing stuff across cuts, and you need to do that simply and easily. Generally the requests we get from customers about 3D boil down to a small number of quite simple things to do.

“Broadcasters say to us the solution has to be simple and fit in to the existing architecture. It’s got to work the same way as ‘mono’ would, once you put other things in the workflow it just gets very difficult to manage. Not to say you can’t turn off one eye to become your standard channel output.

“The way you cut stuff is different with stereo; you tend to linger longer on the wide shots and have not too many cuts on action sequences. You can lose track of what’s going on in that way. Generally fast cutting doesn’t work. So you can’t necessarily say ‘Let’s output the right eye for the mono viewer’ as it will be a boring experience; people will think ‘Why do we have all these wide shots?’.”

My Therapy

One London-based facility that has collected a huge knowledge bank in editing and handling 3D stereo in post is My Therapy. Dado Valentic explains what their process covers: “I can explain to them what works better in post production but really we can handle anything – parallel rig, mirror rig, P+S rig, raw files and all of that. The first thing that I would request is for them to do some colourimetry test shots. So we would basically give them a test chart and they would have to shoot the cameras without a mirror, through the mirror and reflected off the mirror and then we would do colourimetric testing and we would create 3D LUTs that would balance those two cameras. So the colour balancing between left and right eye would happen straightaway.
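To make the chart-based balancing step a little more concrete, here is a rough sketch of the idea, not My Therapy’s actual method. It assumes the same chart patches have been measured as RGB values (0 to 1) from a reference view and from the view that needs correcting, fits a simple per-channel gain by least squares, bakes that gain into a small 3D lookup table and applies it with nearest-entry lookup. A per-channel gain is a deliberate simplification: a real 3D LUT built from colourimetric tests can also capture cross-channel and non-linear differences. All function names and patch values are made up for illustration.

```python
def fit_channel_gains(reference_patches, measured_patches):
    """Least-squares gain per channel so that gain * measured ~ reference."""
    gains = []
    for c in range(3):
        num = sum(r[c] * m[c] for r, m in zip(reference_patches, measured_patches))
        den = sum(m[c] * m[c] for m in measured_patches)
        gains.append(num / den)
    return gains


def build_lut(gains, size=17):
    """Bake the gains into a size^3 table: lut[r][g][b] -> corrected (r, g, b)."""
    step = 1.0 / (size - 1)
    lut = []
    for ri in range(size):
        plane = []
        for gi in range(size):
            line = []
            for bi in range(size):
                # Scale each grid point by its channel gain, clamped to 1.0.
                line.append(tuple(
                    min(1.0, gain * index * step)
                    for gain, index in zip(gains, (ri, gi, bi))
                ))
            plane.append(line)
        lut.append(plane)
    return lut


def apply_lut_nearest(lut, pixel):
    """Look up the nearest table entry for an (r, g, b) pixel in 0..1."""
    size = len(lut)
    r, g, b = (round(min(1.0, max(0.0, v)) * (size - 1)) for v in pixel)
    return lut[r][g][b]


# Hypothetical grey patches: the second camera reads red low and blue high.
reference = [(0.2, 0.2, 0.2), (0.4, 0.4, 0.4), (0.8, 0.8, 0.8)]
measured = [(0.16, 0.2, 0.22), (0.32, 0.4, 0.44), (0.64, 0.8, 0.88)]

gains = fit_channel_gains(reference, measured)
lut = build_lut(gains, size=17)
balanced = apply_lut_nearest(lut, (0.5, 0.5, 0.5))
```

Production tools interpolate between table entries rather than snapping to the nearest one; nearest lookup is used here only to keep the sketch short.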

“Then the next thing we would do is to decide on how we edit and how you want to review the rushes on set. We don’t want to duplicate this step so if you’re going to review rushes on set we would create editing dailies and view those on set. Or we can do a 2D edit.

CINEFORM’S NEO 3D: The First Light window that shows many of the colour and 3D controls visible. The image in the preview windows is a 3D clip shown in side-by-side mode.

“For this we have the same set of rushes as they do in our facility – they leave their sequence in their ‘dropbox’. We open that sequence and re-link to the stereo rushes and then we watch it in stereo. Very often we have found that after seeing the cut in 3D the director and editor would decide to re-cut because they would see a scene in 3D and want to extend it. If something looks really boring in 2D it could look very interesting in 3D because your eye is spending more time discovering the 3D space. There is a lot of tweaking that people like to do after they’ve seen something in 3D. So you have to allow yourself this reviewing/recutting process if you’re working in 2D and reviewing in 3D.

“Then we basically conform the shot and then we start on the stereoscopic adjustments using an Iridas PC-based system which is a real time system, there is no rendering involved.

“I can do all my colour adjustments in Speedgrade, vertical and horizontal misalignments and corrections and my stereo parallax adjustments.

“We are fixing misalignments even in the rushes stage, you want to know if you can really use the shot or not and this is where we discover if there are any more problems with it or not.

“Very often you can try to align things by eye, a little bit up and a little bit down, which is OK and is how we’ve been doing things so far but what we always do is to take the cut in to the cinema with a DCP that we’ve created and see if it is holding on the big screen or not. You really have to see it on a very large screen even more with 3D.

“We have learnt that doing it by eye is OK but there is a human factor involved so we have developed our own software for alignment. So we basically let the computer align the pixels and give us geometric values that we then enter and we get the real time alignment but it’s the software plug-in that is aligning shots for us.

“The algorithm that we use originates from panoramic photography stitching. We use a translation from that use. That is very fast as well.
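Valentic does not describe the software in any more detail, so the following is only a toy illustration of what “letting the computer align the pixels” can mean, and it is far cruder than a stitching-derived algorithm. It assumes greyscale frames stored as lists of rows and simply tries a range of vertical shifts, returning the one at which the two eyes differ least. Only the vertical offset is estimated, since horizontal offset between the eyes is the intended parallax. The function names are hypothetical.

```python
def mean_abs_diff(left, right, dy):
    """Mean absolute pixel difference with `right` shifted by dy rows.

    Positive dy moves the right-eye picture down, negative moves it up.
    Rows that fall outside the frame after shifting are ignored.
    """
    height = len(left)
    total = 0
    count = 0
    for y in range(height):
        source_row = y - dy  # row of `right` that lands on row y after the shift
        if 0 <= source_row < height:
            for a, b in zip(left[y], right[source_row]):
                total += abs(a - b)
                count += 1
    return total / count if count else float("inf")


def estimate_vertical_offset(left, right, max_shift=4):
    """Return the shift (in rows) of `right` that best matches `left`."""
    candidates = range(-max_shift, max_shift + 1)
    return min(candidates, key=lambda dy: mean_abs_diff(left, right, dy))


# Synthetic test picture: every row has a distinct brightness.
left = [[y * y] * 4 for y in range(8)]
# The same picture pushed down by two rows, as if one camera sat slightly low.
right = [[0] * 4, [0] * 4] + left[:6]

offset = estimate_vertical_offset(left, right, max_shift=3)
```

Here `offset` is the geometric value an operator would otherwise find by nudging the picture “a little bit up and a little bit down”; a negative result means the right eye needs to move up by that many rows.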

“We then put the shots back on the system and do the final grading and then from there we create masters. These masters then get encoded in to a 3D DCP and that again goes in to the cinema for viewing and we can come back for further correction if we need to. Usually we do about three passes before the job is finalized.

“How long the post will take depends on how good the stereographer was. For example we’ve done some work with Phil Streather and he’s been incredible – most of the stuff I learnt about 3D was from him. When he does a job we get rushes that are in such a good state that we can do quick turnarounds.

“Other rushes aren’t so good and we have to spend a lot more time on them, that’s why we came up with the alignment software”.

My Therapy use Cineform’s NEO 3D for editing, which has become the hub of what they do even though the software is still in development.