Sky’s the Limit

Posted on Jul 21, 2024 by Samara Husbands

The height of virtual production

Adrian Pennington speaks to the experts, discovering how to create spectacular skyscapes using virtual production

Whether you want to fly among the clouds or capture the perfect golden-hour sunset, virtual production environments can deliver convincing, meteorologically accurate (or even science-fiction fantasy) skyscapes.

Getting it right means choosing the right content pipeline for the project. “For a beautiful generic sunset, a 2D video playback content pipeline may be best,” explains Joanna Alpe, chief commercial officer at Bild Studios and MARS Volume. “You can capture this at high resolution with a camera and play it back with agility on an LED volume. If the scene calls for a more dynamic range of action, and your director needs more flexibility to control action sequences – such as planes flying across the sky and explosions occurring – 3D playback with scenes built in a real-time engine is the best tool for the job.”

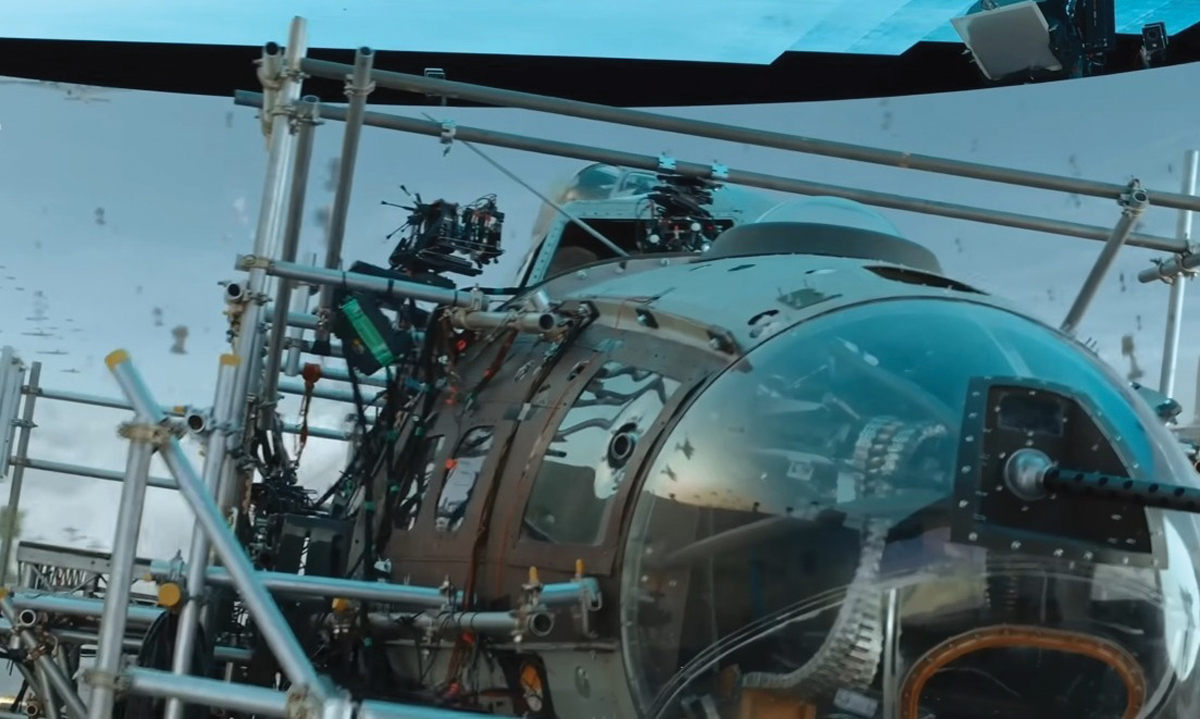

Recent productions such as Masters of the Air have been important in proving what’s possible. Both Bild Studios/MARS and teams at Dimension worked on the Apple TV+ drama. “For flight scenes in the sky, you have a motion base moving your set piece around,” explains George Murphy, creative director, Dimension and DNEG 360. “The environment is genuinely surrounding the actors and filmmakers. They have the freedom to move through it and the environment reorientates to their movement. We’re able to immerse the actors and filmmakers in a world with natural reflections on surfaces, in characters’ eyes and in glass.”

Masters of the Volume

The workflow for creating skies in Masters of the Air involved integrating all available Unreal Engine sky-related techniques and systems, along with custom-made solutions to render convincing dynamic skies for the aerial battle scenes.

James Dinsdale (VP supervisor) and Chris Carty (senior content generalist) at Dimension/DNEG 360 helped merge these tools and systems into one larger asset, allowing for complete control and fast iteration when running the scene on a volume.

“We based our approach on an art-directed high dynamic range image (HDRI) projected onto a sphere 100km across – so the distant sky moved with the correct parallax effect,” says Dinsdale. “We layered in effects like atmospheric height fog and hazing to blend the horizon and integrate with the rest of the sky. We crafted fully volumetric clouds within each scene by using 3D volumetrics along with masking layers and extra custom tools and interfaces.”

This novel workflow was crucial for key moments, like the B-17 bombers dipping in and out of clouds. “It ensured the skies existed in a grounded and consistent space along with the other assets and planes in the scene, allowing for natural interaction in camera,” he adds.
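As a rough illustration of why a sky sphere of that size reads as a distant sky, the sketch below compares the apparent angular shift of a nearby object with that of a point on the sphere when the camera moves sideways. It is not the Dimension/DNEG 360 tooling: the function name, the 10m camera move and the 500m cloud distance are assumptions chosen for illustration, and the camera is assumed to sit near the centre of the sphere.

```python
import math


def parallax_shift_deg(camera_move_m: float, distance_m: float) -> float:
    """Apparent angular shift, in degrees, of a point at distance_m when the
    camera translates camera_move_m perpendicular to the line of sight."""
    return math.degrees(math.atan2(camera_move_m, distance_m))


# A sphere 100km across has a 50km radius (camera assumed near the centre).
SKY_RADIUS_M = 50_000.0

# Hypothetical comparison: a volumetric cloud bank versus the sky sphere.
CAMERA_MOVE_M = 10.0
for label, distance_m in [("cloud bank 500m away", 500.0),
                          ("sky sphere surface", SKY_RADIUS_M)]:
    shift = parallax_shift_deg(CAMERA_MOVE_M, distance_m)
    print(f"{label}: {shift:.4f} degrees for a {CAMERA_MOVE_M:.0f}m camera move")
```

Under these assumptions the nearby cloud shifts by roughly a degree while the sphere barely moves, which is the behaviour expected of a far-off sky behind closer volumetric clouds.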

For big, open skyscapes, you typically won’t miss the parallax that 3D (CGI) scenes bring, as objects are rarely found in the close foreground. Two-dimensional plates, or background plates, are cost effective to capture and photoreal out of the box, so they are often the most suitable final-pixel assets for skyscapes.

“Productions would capture a plate array from a physical location, these would be stitched to create a seamless 270° or 360° plate which is then played back on the volume,” says Alpe. “This approach has been used at MARS Volume for rooftop scenes, backdrops for set-built walls with windows and helicopter travel sequences.”

An exception was MARS Volume’s work on Masters of the Air, whose explosive aerial action required animation sequences and timed explosions in the foreground. In this case, 3D scenes were the best pipeline.

“One of the goals for Masters of the Air was making it historically accurate, and virtual production allowed us to do that,” says Alpe. “Weather data, sunrises and sunsets could all be reconstructed in the real-time engine with historical accuracy.”

Virtual cinematography of skies played a vital role in delivering natural reflections and performance flexibility – allowing the director and actors to see and respond to the aerial dogfights and explosions in real time.

“The Unreal Engine scenes were painstakingly created with this degree of care and attention. We were able to play them back to the LED volume and build tools that gave the directors maximum flexibility, for timing action sequences and what they would see on the set, in camera, at any one time.”

Augmented with practicals

If you need to get the sun (or any kind of directional lighting) in shot, you need to augment the LED volume with practical lights. “A limitation of LEDs is in generating hard, crisp light, but they’re very good at general directional and ambient fill, with natural colour and immersion,” comments Murphy. “For aerial shots featuring movement through clouds, you’d need to replicate fog on-set, which is possible but requires forward planning.”

For the VR worlds of Netflix sci-fi hit 3 Body Problem, the team filmed against a large 180° wall consisting of ARRI SkyPanel LEDs filtered through and hidden behind a Rosco scrim.

“Our board operators could control any kind of colour we wanted,” explains Richard Donnelly ISC. “This enabled us to light the actors precisely – for instance with the sun rising – instead of being led by VFX. We augmented the set with many other lights but, essentially, we lit the actors by the wall. It’s almost the reverse of volume capture where you use plates filmed on location to light live action.”

Balancing practical lights with the skyscape environment generated on a volume ‘is an art form’ that sets an experienced DOP apart from the rest, says Alpe.

“The ambient lighting from an LED volume, as reflected on the skin tones of a person, can sometimes look different to what you would expect by being outside,” she says. “When working in a real-time environment, it’s important for volume control teams to take the time to share lighting understanding with the DOP, to empower them to fully understand exactly what they have control over in the scene. It is through the strength of this collaboration that DOPs can be set up for success on-set.”

LED screens featuring high dynamic range (HDR) are becoming standard, which means they produce a variety of intensities that feel more natural. “When you’re framing something up in camera, even if you’re supplementing it with practical lights, it can feel like those intensities and exposures and colour saturations are realistic. Once cinematographers begin to trust that, they’re becoming more confident in what they can achieve.”

A good VP team will be able to advise on the approach that best suits the needs of the production and the director.

Ever skyward

“There’s some unusual phenomena in the skies – from rainbows to light shimmering through rain,” concludes Murphy. “Recent developments in ray tracing are enabling us to accurately capture the refractive nature of light through atmospherics in camera. We’ll see much richer clouds and skies on demand, which are more photoreal and detailed.”

This feature was first published in the August 2024 issue of Definition.