Feeding The Thin Client

Posted on Dec 23, 2016 by Julian Mitchell

The days of local infrastructure for post studios are numbered. Studios in the cloud are here. While some M&E companies are dragging their feet, technology giants such as Amazon and Google are investing millions.

Words: Ben Dair

A couple of years ago, market research company Frost and Sullivan stated that cloud solutions were slowly replacing ‘on-premise’ solutions in every industry across the globe. Of the media and entertainment industry, however, they said: “Solutions for the cloud cannot completely replace ground counterparts.”

“The current situation in the market,” they said, was characterised by “… considerable confusion in the difference between private cloud, public cloud, SaaS. This lack of awareness that exists at present is also a reason for the low adoption among media enterprises.”

It has been over five years since cloud-based technology entered the post-production market. In the early days, cloud rendering was pioneered by start-ups and used by small to medium-sized production and post-production companies to create content for review and approval. In 2016 the cloud is being used more seriously by larger facilities and companies, which is ideal for times when 80,000 processors are not enough!

Hopefully, by now most readers have a fairly good understanding of the benefits of using the cloud. The term has always been nebulous, suggesting something intangible. The reality is very different: cloud technology is built from the same kind of network switches and servers used on site. The key difference is that resource allocation has been honed so that cloud hardware is in use almost 100% of the time, whereas most desktop computers are hardly used in real terms. Render farms are the exception, since the economic benefit of an on-site render farm is only achieved through maximum utilisation.

Cloud technology and services have expanded into all areas of production, post-production and delivery. Fundamentally, the key to using cloud services is connectivity, and high-capacity connectivity (1Gbps and more) for heavy lifting. The exception is when content and processing are already in place before connectivity is lost or reduced. Various dedicated network providers, eg. Sohonet and euNetworks, allow a connection to burst for a short period of time before returning to the base bandwidth.
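
A rough, back-of-the-envelope sketch of why the 1Gbps figure and burst capability matter: it estimates how long a transfer takes on a link that bursts to a higher rate for a fixed window and then drops back to its base bandwidth. The burst model, the function name and the figures are illustrative assumptions, not how Sohonet, euNetworks or any other provider actually meters bursts; decimal units are used and protocol overhead is ignored.

```python
def transfer_time_s(size_gb: float, base_gbps: float,
                    burst_gbps: float, burst_window_s: float) -> float:
    """Seconds to move size_gb gigabytes over a link that runs at burst_gbps
    for the first burst_window_s seconds, then falls back to base_gbps.

    Hypothetical model: decimal units, no protocol overhead or contention.
    """
    size_gbit = size_gb * 8                      # gigabytes -> gigabits
    burst_gbit = burst_gbps * burst_window_s     # data moved during the burst
    if size_gbit <= burst_gbit:
        return size_gbit / burst_gbps            # finishes inside the burst
    return burst_window_s + (size_gbit - burst_gbit) / base_gbps


# 500GB of plates over a 1Gbps line, without and with a 5-minute 10Gbps burst
print(transfer_time_s(500, 1, 1, 0))      # 4000.0 seconds (about 67 minutes)
print(transfer_time_s(500, 1, 10, 300))   # 1300.0 seconds (about 22 minutes)
```

Even a short burst cuts the wait substantially when the base link is the bottleneck; the same arithmetic shows why sustained heavy lifting needs a high base bandwidth rather than bursts alone.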

We see cloud services employed in almost every part of the glass-to-glass and digital-to-digital supply chain, more in some areas than others. Recording directly to the cloud is mainly a live TV/streaming application, but expect to see more in this area, as there’s been a flurry of acquisitions by Google (Anvato) and IBM (Clearleap and UStream).

Cloud-based services are growing fastest in rendering, predominantly CGI-based rendering, but 2D rendering and processing is also a growth area. At the recent HPA UK Retreat there were several sessions that discussed the practicalities, implications and benefits of using remote rendering and rendering as a service (RaaS).

What’s Available In the Cloud?

There are now many different services and the following is an overview of typical cloud-based services:

• Remote local servers based on client needs

• Ingest/transcoding/creation of proxies

• Archiving/storage/content distribution

• Encryption and watermarking of footage

• Remote CGI rendering

• Monitor usage and provide activity logs

• Upload/download/streaming of material including mobile devices

• Calendars/email notifications/watch folders

• User logging/annotation/comments of dailies or cut sequences

• Online application for collaborative viewing/logging/editing

• Support for Application Programming Interfaces (APIs)

• Database search functions

Anyone who is new to using cloud technology will be dazzled by the number of different options and entry points. There are several categories of service, all reached over either dedicated private connectivity or the public Internet. Most network connections, whether dedicated private links or the public Internet, are protected by a virtual private network (VPN).

• Infrastructure as a Service: generic elastic compute and scalable infrastructure provisioned by providers such as Google, Amazon or Microsoft, where users select a predefined configuration of vCPUs, memory and storage. Most of these services also allow users to create customised VM profiles. Used by medium to large facilities and studios requiring bespoke configurations and workflows. The typical on-premise to cloud ratio is at least 80:20, but can be as low as 60:40.

• Rendering as a Service or Transcoding as a Service: turnkey rendering/processing where all resources and licences are provided for a per-minute or per-hour fee. For example, Zync Render, Rebus, Rendercore and Elemental/Amazon Elastic Transcoder. Used by small to medium facilities and studios that require resources for short periods aligned to their bookings. The typical on-premise to cloud ratio is 40:60, while new start-ups have an on-premise to cloud ratio of 10:90.

• Collaboration as a Service: value-added collaboration platforms that include all the resources and professional services needed to visualise and optimise the workflow or pipeline. For example, PIX Systems, DAX Production Cloud and Sundog Media Toolkit. Used by businesses of any scale that require efficient creative collaboration.
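
The Infrastructure as a Service model in the list above boils down to choosing from a provider’s predefined configurations, or falling back to a custom profile when nothing fits. A minimal sketch of that choice, assuming a small hypothetical catalogue: the machine names and prices below are invented for illustration and are not Google, Amazon or Microsoft machine types.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class MachineType:
    name: str
    vcpus: int
    memory_gb: float
    hourly_price: float  # hypothetical price per hour


# Hypothetical catalogue of predefined configurations.
CATALOGUE = (
    MachineType("std-8", 8, 30.0, 0.40),
    MachineType("std-16", 16, 60.0, 0.80),
    MachineType("highmem-16", 16, 104.0, 1.00),
    MachineType("std-32", 32, 120.0, 1.60),
)


def pick_machine(vcpus: int, memory_gb: float,
                 catalogue: Sequence[MachineType] = CATALOGUE) -> Optional[MachineType]:
    """Cheapest predefined type that meets the requirement, or None if a
    customised VM profile is needed instead."""
    fits = [m for m in catalogue if m.vcpus >= vcpus and m.memory_gb >= memory_gb]
    return min(fits, key=lambda m: m.hourly_price) if fits else None


print(pick_machine(16, 90).name)  # highmem-16: std-16 has too little memory
print(pick_machine(64, 16))       # None: no predefined type is big enough
```

A `None` result is where a customised VM profile would come in; real catalogues also vary by region, GPU options and storage type.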

Other adjacent services that can be utilised in the cloud are subscription-based software licences and Platform as a Service, eg. Adobe Anywhere and Avid Sphere, Media Composer Cloud or Forbidden Forscene. Interestingly, both Adobe and Avid use a hybrid model that utilises both private and public cloud infrastructure. In the case of Adobe, GPU processing is used to transcode content for remote delivery.

Why Cloud is good for post

For post-production, using the cloud provides a quick way to scale without capital expenditure or a commitment to long-term equipment maintenance. For small to medium facilities, cloud-based services provide the ability to compete for jobs they wouldn’t normally be able to pitch for. But talent is still the main focus for most facilities: it doesn’t matter how many resources and processes you have unless you have the talent to use them.

It is not surprising to learn that the primary barrier to using cloud-based services is connectivity, but it’s not as simple as that. The major considerations are bandwidth, latency, consistency (reliability) and network contention (the number of users sharing the link at any one time).

The higher the bandwidth, the better the throughput. Latency is the time taken for packets of data to cross the network, typically measured as a round trip. It is bounded by the speed of light over the distance between the two points, plus delays added by network equipment. The lower the latency, the better for pipelines requiring good response times, eg. remote desktops and high-quality dailies playback. Consistency and reliability are fundamental to reducing pipeline bottlenecks and keeping the workflow efficient. Low contention is required to ensure bandwidth is guaranteed rather than shared.
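
Because latency has a floor set by physics, it can be estimated before any equipment is bought. The sketch below computes the minimum round-trip time over a fibre path (light in glass travels at roughly two-thirds of its speed in a vacuum) and the bandwidth-delay product, the amount of data that must be in flight to keep a link full. The 400km path length is an assumption for illustration; real fibre routes are longer than straight-line distances, and switching and queuing add further delay.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458
FIBRE_VELOCITY_FACTOR = 0.67  # approximate; depends on the fibre


def min_rtt_ms(path_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone."""
    one_way_s = path_km / (SPEED_OF_LIGHT_KM_S * FIBRE_VELOCITY_FACTOR)
    return 2 * one_way_s * 1000


def bandwidth_delay_product_mb(link_gbps: float, rtt_ms: float) -> float:
    """Megabytes that must be in flight to keep the link busy for one round trip."""
    bits_in_flight = link_gbps * 1e9 * (rtt_ms / 1000)
    return bits_in_flight / 8 / 1e6


print(round(min_rtt_ms(400), 1))                      # 4.0 ms for a hypothetical 400km path
print(round(bandwidth_delay_product_mb(10, 10), 1))   # 12.5 MB in flight on 10Gbps at 10ms
```

The second figure is why high-latency links need careful tuning: throughput cannot exceed window size divided by round-trip time, so a single TCP connection limited to a 64KB window (no window scaling) tops out at roughly 52Mbit/s at 10ms, however fast the link.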

The Mill has been working with the cloud for many years. Twelve years ago, clients wanted to review work as QuickTimes, and that was when The Mill first moved to the cloud, so that clients could view ads and carry out reviews remotely. Now, in 2016, The Mill is rendering in the cloud on 300 nodes distributed around the world, using both Google and Amazon.

Roy Trosh, The Mill’s technical director comments: “Data and licences are centralised in London and then distributed around the world as required. Eventually, this will flip to all the data, workstations and licences residing in the cloud. Once this happens the resources will be distributed to wherever the artist is, albeit in the facility or bedroom. This will happen within the next three to four years.”

For commercials, you still need client-facing services and experiences; what changes is where the resources reside. Pay-as-you-go charges can easily be passed on to the client. Space and power are a constant challenge, especially when moving to new premises. Networks are getting faster and latency has improved. Facilities still want an office and a presence (for client-attended sessions), but the technology doesn’t need to be on site.

How does it work?

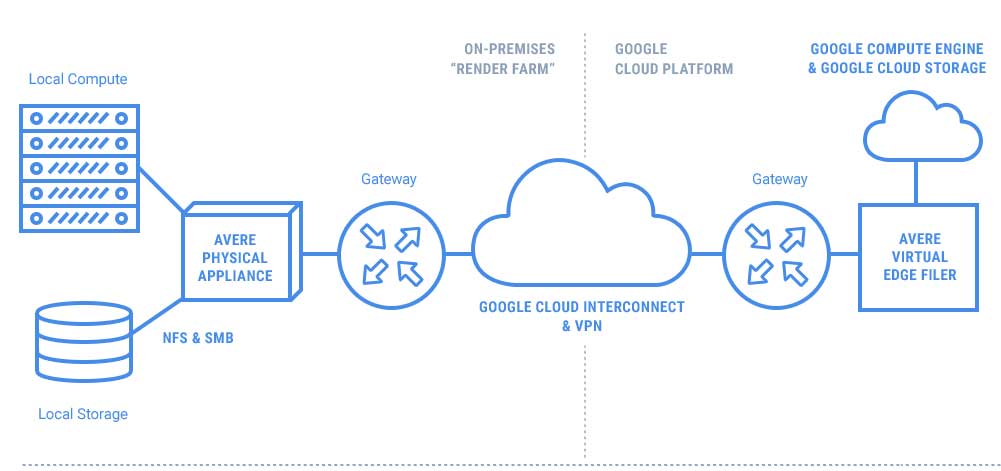

Steve MacPherson, CTO, Framestore explains that pooling resources helped them overcome the volume of rendering needed: “Framestore’s infrastructure needed an overhaul six years ago. We did the rebuild in the run-up to the movie Gravity. Within the London base, physical space was the main challenge and it was clear the volume of rendering outstripped the site resources. Framestore connected to Cinesite and Double Negative via Sohonet 10Gbps and set up a VLAN for the additional rendering. Collaborating with partners like these broke the barrier and highlighted that we don’t have to own everything. Years later we had a similar problem and started using Google to get the additional burst resources.

“With cloud capability we have a greater granularity of the cost of a job through cost analysis and again this is passed on to the client. With security, data is meaningless until the images are transferred. The connections are secured via hardware-provisioned VPN.

“The thing about the cloud is, putting content and data there is cheap, ie. the ingress costs are low. If we cache the data in the cloud, this can improve performance significantly – ensuring that rendering or processing happens as close as possible to the data. The challenge is keeping the content safe and secure. The other consideration is that you can lose a sense of control by putting too much in the cloud. We are performance obsessed and we need to have the telemetry and metrics to manage pipeline and process. We always have the ability to support 60-80% capacity without having a network connection.”

According to Todd Prives at Google, the practical areas of concern are licensing, security and workload. “Connectivity is important, but not the only aspect of using cloud-based services. Workloads and the number of jobs to be completed within a particular timeframe are also a consideration. With sub-10ms latency, as between London and St Ghislain, Belgium (Google’s major European datacentre), connecting NFS mounts using a variety of interfaces including fibre channel is possible.”
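
Latency matters so much for NFS-style access because many file operations wait for a reply before the next one starts. A rough sketch of the cost, assuming a fixed, hypothetical number of synchronous round trips per file (the real count depends on the client, caching and protocol version):

```python
def round_trip_wait_s(num_files: int, round_trips_per_file: int,
                      rtt_ms: float, parallel_requests: int = 1) -> float:
    """Seconds spent waiting on round trips when files are opened over a
    network mount; ignores bandwidth, caching and server time."""
    total_round_trips = num_files * round_trips_per_file
    return total_round_trips * rtt_ms / 1000 / parallel_requests


# 10,000 texture files, assuming 3 round trips each
print(round_trip_wait_s(10_000, 3, 0.2))   # on-site LAN at 0.2ms: about 6 seconds
print(round_trip_wait_s(10_000, 3, 8.0))   # remote mount at 8ms: about 240 seconds
```

Spreading requests across parallel connections, or pre-staging assets so that reads are local, attacks the same multiplication from different ends.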

Network latency is the term used to indicate any kind of delay that happens in data communication over a network. For higher-latency connections around the world, asset synchronisation and management are key to using cloud-based services. Assets used for rendering, eg. very large textures and images, need to be transferred to the cloud platform ahead of time. Then, when there are new iterations of a scene, only the lightweight changes need to be synchronised before rendering, as all the other assets are already in the cloud.
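
Pre-staging relies on knowing which assets have changed since the last sync. A minimal sketch, assuming a hypothetical manifest that maps each asset path to a SHA-256 content hash of the copy already in the cloud; file contents are held in memory here for brevity, where a real tool would hash files in chunks from disk.

```python
import hashlib
from typing import Dict, List


def content_hash(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def assets_to_sync(local_assets: Dict[str, bytes],
                   cloud_manifest: Dict[str, str]) -> List[str]:
    """Paths whose content is missing from the cloud or differs from the cloud copy."""
    return [
        path
        for path, data in sorted(local_assets.items())
        if cloud_manifest.get(path) != content_hash(data)
    ]


local = {
    "textures/cliff_8k.exr": b"...heavy texture data...",
    "scenes/sh010_lighting.ma": b"scene version 2",
}
manifest = {
    "textures/cliff_8k.exr": content_hash(b"...heavy texture data..."),  # pre-staged earlier
    "scenes/sh010_lighting.ma": content_hash(b"scene version 1"),         # stale
}
print(assets_to_sync(local, manifest))  # ['scenes/sh010_lighting.ma']
```

Only the changed scene file crosses the network; the heavy texture, already present in the cloud with a matching hash, is skipped.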

Todd Prives explains how the assets are managed by Google: “The Deadline Render manager is typically used for intelligently managing heavy assets during pre-staging, pre-production or production. The transfer process is usually completed in the background. Large assets are usually rendered locally and then synchronised to the cloud. The size of a large rendering asset is typically more than 2GB. Therefore, during production only the CG scene and recently created assets are uploaded to the cloud platform.”
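
The quote suggests a simple split between assets pre-staged in the background and those uploaded with each job. The sketch below applies the 2GB threshold quoted; it is a hypothetical planning helper, not Deadline’s own asset-transfer API, and it assumes "GB" means 2^30 bytes.

```python
from dataclasses import dataclass
from typing import List, Tuple

LARGE_ASSET_BYTES = 2 * 1024 ** 3  # "more than 2GB", read as binary gigabytes


@dataclass
class Asset:
    path: str
    size_bytes: int
    already_in_cloud: bool


def plan_transfers(assets: List[Asset]) -> Tuple[List[str], List[str]]:
    """Split pending assets into (pre-stage in the background, upload at job submission)."""
    prestage: List[str] = []
    at_submit: List[str] = []
    for asset in assets:
        if asset.already_in_cloud:
            continue  # nothing to move
        target = prestage if asset.size_bytes > LARGE_ASSET_BYTES else at_submit
        target.append(asset.path)
    return prestage, at_submit


assets = [
    Asset("textures/cliff_8k.exr", 6 * 1024 ** 3, False),
    Asset("scenes/sh010_lighting.ma", 40 * 1024 ** 2, False),
    Asset("textures/sky_16k.exr", 3 * 1024 ** 3, True),
]
print(plan_transfers(assets))
# (['textures/cliff_8k.exr'], ['scenes/sh010_lighting.ma'])
```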

Google have two offerings for rendering in the cloud, Google Cloud Platform (GCP) and Zync Render. Zync was acquired in August 2014 and originally used Amazon Web Services for cloud infrastructure. Since then, Zync has been converted to use GCP. The only major difference between GCP and Zync Render is that Zync is a turnkey solution for small to medium FX shops and facilities. Larger facilities that require a more bespoke platform use GCP.

Using standard virtual machines (VMs) on Google Compute Engine is not the only way to provide additional rendering capacity. Many companies are now using preemptible VMs, which can be rented for short periods at up to 70% less. The downside is that preemptible VMs can be clawed back by GCP as demand for non-preemptible VMs increases. Nevertheless, Google recently reduced the price of preemptible VMs by 33%.
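
Whether preemptible capacity is actually cheaper depends on how much work is lost and redone when VMs are reclaimed. A minimal cost sketch, assuming the "up to 70%" discount quoted, a hypothetical on-demand rate, and that a fixed fraction of node-hours has to be rerun after preemptions:

```python
def job_cost(node_hours: float, on_demand_rate: float,
             discount: float = 0.0, rework_fraction: float = 0.0) -> float:
    """Cost of a render job; rework_fraction inflates the node-hours to
    account for frames rerun after preemption."""
    rate = on_demand_rate * (1 - discount)
    return node_hours * (1 + rework_fraction) * rate


def breakeven_rework(discount: float) -> float:
    """Rework fraction at which preemptible VMs cost the same as on-demand."""
    return discount / (1 - discount)


print(job_cost(1000, 1.00))                                                  # 1000.0 on demand
print(round(job_cost(1000, 1.00, discount=0.70, rework_fraction=0.10), 2))  # 330.0
print(round(breakeven_rework(0.70), 2))                                      # 2.33
```

On these assumptions the discount survives even heavy rework, so the practical risk of preemptible capacity is usually schedule rather than cost: reclaimed nodes delay delivery.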

Amazon also have their own version of preemptible VMs, called Amazon EC2 Spot Instances. Whereas the pricing of Google preemptible VMs is fixed, Amazon Spot Instance pricing is based on market demand and therefore fluctuates. With Spot Instances, users bid for resources and keep them until the Spot market price rises above their bid.
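
The Spot bidding model can be simulated in a few lines. This sketch walks through an hourly Spot price series and stops at the first hour the price exceeds the bid. The price series is invented, billing is simplified to whole hours, and it assumes the user pays the prevailing Spot price rather than their bid, as in Amazon’s bid-based Spot market of the time.

```python
from typing import Sequence, Tuple


def run_on_spot(bid: float, hourly_prices: Sequence[float]) -> Tuple[int, float]:
    """Return (hours run, amount paid) before the Spot price rises above the bid."""
    paid = 0.0
    for hour, price in enumerate(hourly_prices):
        if price > bid:
            return hour, paid      # instance reclaimed at the start of this hour
        paid += price              # assumption: pay the market price, not the bid
    return len(hourly_prices), paid


prices = [0.30, 0.32, 0.45, 0.90, 0.31]   # hypothetical $/hour
hours, paid = run_on_spot(0.50, prices)
print(hours, round(paid, 2))              # 3 1.07
```

A higher bid buys more uninterrupted hours at the cost of occasionally paying peak prices; a lower bid is cheaper but more likely to be interrupted mid-job.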

Both Google preemptible VMs and Amazon EC2 Spot Instances have limitations. Neither Google nor Amazon guarantees the availability of preemptible VMs or Spot Instances, even after they are launched. Google gives only a 30-second preemption notice, whereas Amazon provides a two-minute warning through its Spot Instance Termination Notice, allowing for a more graceful shutdown.
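
Because capacity can vanish mid-job, render work on these instances is usually broken into small units so that little is lost. A minimal sketch of a frame-by-frame loop that checks for a termination notice before starting each frame. The loop and its callables are hypothetical; the metadata URL is the location Amazon documented for the Spot termination notice, but treat it as an assumption and check current AWS documentation (newer instances may require IMDSv2 session tokens).

```python
import urllib.request
from typing import Callable, Iterable, List

# EC2 instance-metadata path for the Spot termination notice (IMDSv1-style
# access assumed; verify against current AWS documentation).
SPOT_NOTICE_URL = "http://169.254.169.254/latest/meta-data/spot/termination-time"


def aws_termination_pending(timeout_s: float = 1.0) -> bool:
    """True if a termination time has been published for this Spot Instance."""
    try:
        with urllib.request.urlopen(SPOT_NOTICE_URL, timeout=timeout_s) as resp:
            return resp.status == 200
    except OSError:  # 404 (no notice), timeouts, unreachable metadata service
        return False


def render_frames(frames: Iterable[int],
                  render_frame: Callable[[int], None],
                  termination_pending: Callable[[], bool]) -> List[int]:
    """Render frame by frame, checking for a termination notice before each
    one; returns the frames completed so the rest can be requeued."""
    completed = []
    for frame in frames:
        if termination_pending():
            break  # don't start a frame that may not finish
        render_frame(frame)
        completed.append(frame)
    return completed
```

On a real Spot Instance, `aws_termination_pending` would be passed as the check and the hypothetical `render_frame` callable would invoke the actual renderer. Checking between frames bounds the loss to the frame in flight, whether or not a warning arrives.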

Does the cloud save money?

In the short term, cloud-based services do save money. The main reason is that there are no capital start-up costs for servers, air conditioning or space; the only start-up cost is obtaining sufficient, secure network connectivity. Over a three-year period, buying your own servers and infrastructure is still cheaper, but it gives you fixed capacity and carries an overhead of at least 20% on top of the original purchase price.
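
The three-year comparison can be reduced to a break-even utilisation: the fraction of the year a node must be busy before owning it beats renting the same node-hours. A minimal sketch, assuming straight-line amortisation, the 20% overhead applied to the purchase price, and a hypothetical node price and cloud rate; power, space and staff are folded into that overhead rather than modelled separately.

```python
HOURS_PER_YEAR = 24 * 365


def owned_cost_per_year(purchase_price: float, overhead: float = 0.20,
                        years: int = 3) -> float:
    """Purchase price plus overhead, amortised evenly over the node's life."""
    return purchase_price * (1 + overhead) / years


def breakeven_utilisation(purchase_price: float, cloud_hourly_rate: float,
                          overhead: float = 0.20, years: int = 3) -> float:
    """Busy fraction of the year above which owning is cheaper than the cloud."""
    cloud_cost_full_year = cloud_hourly_rate * HOURS_PER_YEAR
    return owned_cost_per_year(purchase_price, overhead, years) / cloud_cost_full_year


# Hypothetical: a £6,000 render node versus an equivalent cloud node at £0.50/hour
print(round(breakeven_utilisation(6000, 0.50), 2))  # 0.55
```

On these invented numbers, a node busy for more than about 55% of the year is cheaper to own, in line with the view that an on-site render farm only pays off at high utilisation; bursty commercial work well below that figure favours the cloud.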

Roy Trosh again: “The cost to buy a node and amortise that over three years is still cheaper than the equivalent node in the cloud over the same utilisation. Multi-site is the biggest issue and the resources follow the sun. Commercials are a bit rock ’n’ roll. Cloud provides the scalability and flexibility for a job. The VFX supervisors now know that you can set up 100 nodes with a click of the button. The OpEx model does give smaller or start-up facilities an edge to some degree – but the focus is really on the talent and not resources.”

Where are we going?

Compute, whether on-demand or preemptible/Spot Instances, is clearly going to be used more and more for applications requiring a large volume of compute capability at short notice. For more established pipelines, cloud rendering and processing will continue to follow a hybrid model, mixing on-premise assets with private or public cloud facilities.
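
One simple way to picture the hybrid model is a scheduler that fills on-premise nodes first and bursts the remainder to cloud capacity. The greedy policy and job names below are hypothetical; a production render manager would also weigh priorities, deadlines, data locality and licence availability.

```python
from typing import List, Tuple


def place_jobs(jobs: List[Tuple[str, int]],
               on_prem_nodes: int) -> Tuple[List[str], List[str]]:
    """Greedy placement in submission order: a job runs on-premise if its
    node count still fits, otherwise it bursts to the cloud."""
    free = on_prem_nodes
    on_prem: List[str] = []
    cloud: List[str] = []
    for name, nodes in jobs:
        if nodes <= free:
            on_prem.append(name)
            free -= nodes
        else:
            cloud.append(name)
    return on_prem, cloud


jobs = [("comp_sh010", 40), ("fx_sim_sh020", 80), ("lighting_sh030", 50)]
print(place_jobs(jobs, on_prem_nodes=100))
# (['comp_sh010', 'lighting_sh030'], ['fx_sim_sh020'])
```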

Glossary of terms

API Application Programming Interface, a set of functions and procedures that allow the creation of applications which access the features or data of an operating system, application or other service.

CapEx A capital expenditure is money invested by a company to acquire or upgrade fixed, physical, non-consumable assets, such as buildings and equipment or a new business.

IaaS Infrastructure as a Service, this refers to online services that abstract the user from the details of infrastructure like physical computing resources, location, data partitioning, scaling, security, backup etc.

OpEx An operating expense, operating expenditure, operational expense or operational expenditure is an ongoing cost for running a product, business or system.

PaaS Platform as a Service, when cloud providers deliver a computing platform, typically including operating system, programming-language execution environment, database and web server.

RaaS Rendering as a Service, this refers to online services that provide elastic compute, storage and software render licences to support cloud-based rendering.

VM Virtual machine is an emulation of a given computer system. Virtual machines operate based on the computer architecture and functions of a real or hypothetical computer, and their implementations may involve specialised hardware, software or a combination.

VLAN Virtual Local Area Network, any broadcast domain that is partitioned and isolated in a computer network at the data link layer (OSI layer 2).

VPN Virtual Private Network, a private network that extends across a public network such as the Internet. It enables users to send and receive data across shared or public networks as if their computing devices were directly connected to the private network.

Ben Dair is MD and principal product manager consultant at Dair Design and Consulting, specialising in strategic product management, software development, user experience development and cloud infrastructure.