Round Table: Broadcast Technology

Posted on Oct 30, 2024 by Samara Husbands

What’s next for broadcast production tech?

Experts weigh in on the cutting-edge trends in broadcast tech, revealing how they’re shaping storytelling and revolutionising the viewer experience

The panel

- Craig Heffernan, Technical sales director, Blackmagic Design

- Peter Crithary, Vice president, live entertainment, ARRI Americas Inc

- Quentin Jorquera, Cinematographer & virtual production consultant

DEFINITION: How are advancements in camera and lens technology shaping the future of visual storytelling in broadcast and live production?

Quentin Jorquera: Equipment quality keeps improving while costs are dropping, making high-end tools more accessible. More efficient encoding now delivers better image quality and colour depth without slowing down production. Larger sensors are bringing a more cinematic feel to broadcast, while improved sensor and lens stabilisation systems are making shots steadier.

We’re also seeing faster, more intelligent autofocus systems that maintain an organic feel, which is crucial for live production. Additionally, lenses with better encoding (reporting focus, zoom and iris data) and more efficient workflows are making VFX integration and in-camera visual effects (ICVFX) smoother, allowing for more creative and seamless visual experiences.

Peter Crithary: At the forefront of this is ARRI’s proprietary colour science, REVEAL, which provides more accurate colour rendition and stable colour fidelity across the exposure range, with lifelike skin tones and precise reproduction of highly saturated colours. A prime example of shaping the future of visual storytelling is ARRI bringing the ALEXA 35 Live Multicam system to live entertainment.

The system pushes the boundaries of dynamic range and light sensitivity. With over 17 stops of dynamic range (equivalent to roughly 102dB), it ensures exceptional image quality even in highly challenging lighting conditions, capturing bright highlights and deep shadows simultaneously without sacrificing detail.

The video shader (the engineer who controls the cameras’ exposure and colour) can focus entirely on crafting the image, rather than constantly compensating for dynamic range compression. Highlights are not clipped, and the deepest shadow detail is preserved in the same frame, a situation that comes up constantly at live sporting events.
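For readers checking the numbers: each stop is a doubling of light, so a dynamic range of n stops is a contrast ratio of 2^n:1. Expressed as 20·log10 of that ratio (the convention usually applied to sensor dynamic range), each stop is worth about 6.02dB, so 17 stops comes to roughly 102dB. The short Python sketch below does the conversion; the function name is ours, not ARRI’s.

```python
import math


def stops_to_db(stops: float) -> float:
    """Convert a dynamic range in photographic stops to decibels."""
    # Each stop doubles the light, so n stops is a contrast ratio of 2**n : 1.
    contrast_ratio = 2.0 ** stops
    # 20 * log10(ratio): the amplitude-style dB convention used for sensor dynamic range.
    return 20.0 * math.log10(contrast_ratio)


if __name__ == "__main__":
    for s in (12, 14, 17):
        print(f"{s} stops ~ {2 ** s:,}:1 ~ {stops_to_db(s):.1f} dB")
```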

Another key area shaping the future is HDR. The ALEXA 35 Live’s real-time HDR output in HLG (Hybrid Log-Gamma) or PQ (Perceptual Quantizer) allows seamless integration into any SDR or HDR workflow. This delivers a more immersive visual experience, with high-contrast scenes handled effortlessly. Broadcasters benefit from accurate colour reproduction, pleasing skin tones and image consistency across diverse lighting scenarios. This makes it a game changer for live sports, concerts and large-scale productions where team and sponsor colours must be reproduced accurately.
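HLG and PQ are the two HDR transfer functions defined in ITU-R BT.2100. HLG is a relative, scene-referred curve designed to give a usable picture on SDR displays as well; PQ (standardised as SMPTE ST 2084) encodes absolute luminance up to 10,000 cd/m². A minimal Python sketch of the two encoding curves, using the published constants, is below; it is illustrative only and makes no claim about how the ALEXA 35 Live implements them internally.

```python
import math

# HLG OETF constants (ITU-R BT.2100).
HLG_A = 0.17883277
HLG_B = 1.0 - 4.0 * HLG_A                      # 0.28466892
HLG_C = 0.5 - HLG_A * math.log(4.0 * HLG_A)    # approx. 0.55991073

# PQ constants (SMPTE ST 2084 / ITU-R BT.2100).
PQ_M1 = 2610.0 / 16384.0
PQ_M2 = 2523.0 / 4096.0 * 128.0
PQ_C1 = 3424.0 / 4096.0
PQ_C2 = 2413.0 / 4096.0 * 32.0
PQ_C3 = 2392.0 / 4096.0 * 32.0


def hlg_oetf(e: float) -> float:
    """Scene-linear light E (normalised to [0, 1]) -> HLG signal E' in [0, 1]."""
    e = min(max(e, 0.0), 1.0)
    if e <= 1.0 / 12.0:
        return math.sqrt(3.0 * e)                      # square-root segment for darker tones
    return HLG_A * math.log(12.0 * e - HLG_B) + HLG_C  # log segment compresses highlights


def pq_inverse_eotf(nits: float) -> float:
    """Absolute display luminance in cd/m^2 (0 to 10,000) -> PQ signal in [0, 1]."""
    y = min(max(nits, 0.0), 10000.0) / 10000.0
    y_m1 = y ** PQ_M1
    return ((PQ_C1 + PQ_C2 * y_m1) / (1.0 + PQ_C3 * y_m1)) ** PQ_M2


if __name__ == "__main__":
    print("HLG signal at 1/12 scene light:", round(hlg_oetf(1.0 / 12.0), 3))
    for nits in (100, 1000, 10000):
        print(f"PQ signal for {nits} cd/m2:", round(pq_inverse_eotf(nits), 3))
```

Note how PQ spends roughly half of its signal range on the first 100 cd/m², which is where the eye is most sensitive to banding.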

In addition to integrating seamlessly into existing infrastructure, the ALEXA 35 Live is ready for SMPTE ST 2110, allowing uncompressed video, audio and metadata to flow into modern IP-based production environments.
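Part of what makes ST 2110 demanding is that ST 2110-20 carries uncompressed active video. A rough payload estimate is width × height × samples per pixel × bit depth × frame rate. The Python sketch below assumes the usual sample counts for each chroma-subsampling scheme and ignores RTP/UDP/IP packet overhead, so real network rates run somewhat higher.

```python
# Samples per pixel for common chroma-subsampling schemes (luma + two chroma channels).
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def active_video_gbps(width: int, height: int, frame_rate: float,
                      bit_depth: int, sampling: str = "4:2:2") -> float:
    """Approximate uncompressed active-picture payload in Gbit/s (no packet overhead)."""
    bits_per_frame = width * height * SAMPLES_PER_PIXEL[sampling] * bit_depth
    return bits_per_frame * frame_rate / 1e9


if __name__ == "__main__":
    print(f"1080p59.94 10-bit 4:2:2: {active_video_gbps(1920, 1080, 60000 / 1001, 10):.2f} Gbit/s")
    print(f"2160p50 10-bit 4:2:2:    {active_video_gbps(3840, 2160, 50, 10):.2f} Gbit/s")
```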

On the lens side, innovations in purpose-built large-format zoom lenses let directors capture wide shots and close-ups with ease, ensuring creative control without compromising image quality. The ARRI ALEXA 35 Live’s technological advancements elevate the standard for live broadcasting, making visual storytelling more lifelike and immersive.

DEF: How do emerging technologies such as AI impact broadcast production, from camera operation to post-production?

Craig Heffernan: Blackmagic Design’s approach to incorporating AI into production workflows has so far focused on improving efficiency by removing repetitive, mundane tasks, freeing users to be creative. Our AI development has centred on building a machine-learning system into DaVinci Resolve Studio, known as the DaVinci Neural Engine, which offers a range of tools and features that handle complex or repetitive tasks effectively every time the operator uses them.

For example, a new AI-based Voice Isolation tool can quickly analyse audio clips and distinguish human voices from unwanted background noise. Then, with a simple UI palette, users can suppress the unwanted elements to clean up the audio and improve the clarity of voices. With standard audio tools, this task would take much longer and require a lot more experience.

It means users can clean up interview or location audio almost instantly.

Elsewhere, for video, our IntelliTrack AI optimises tracking and stabilisation tasks. It recognises objects in the frame and can attach parameters to them, for example automatically panning audio to follow objects that move through the frame to build AI-created immersive audio. Similarly, it uses the same AI-based recognition for object removal, tracking an unwanted object in a clip and painting it out using background information from other frames.
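The paint-out step rests on a simple idea: when an object moves, the background it hides in one frame is usually visible in others. A deliberately simplified Python sketch of that temporal fill follows; it assumes a locked-off camera and a mask already produced by tracking, the function and type names are hypothetical, and it is a generic illustration rather than how DaVinci Resolve’s tools work internally.

```python
from statistics import median
from typing import List

Frame = List[List[float]]  # greyscale frame: rows of pixel values
Mask = List[List[bool]]    # True where the unwanted object covers the pixel


def fill_from_other_frames(frames: List[Frame], masks: List[Mask], target: int) -> Frame:
    """Paint out masked pixels in frames[target] using the same pixel from other frames.

    Assumes a locked-off (or already stabilised) camera, so a given pixel position
    shows the same background in every frame whenever the object is not covering it.
    """
    result = [row[:] for row in frames[target]]  # copy, so the input is not modified
    for y, row in enumerate(result):
        for x in range(len(row)):
            if not masks[target][y][x]:
                continue  # pixel is already clean background
            samples = [frame[y][x] for i, (frame, mask) in enumerate(zip(frames, masks))
                       if i != target and not mask[y][x]]
            if samples:
                # The median rejects stray outliers from other moving objects.
                row[x] = median(samples)
            # If no frame ever sees this pixel uncovered, it is left as-is;
            # a real tool would fall back to spatial inpainting here.
    return result
```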

These tasks would be time-consuming to do manually, but can be processed quickly in DaVinci Resolve, allowing users to concentrate on the creative storytelling. Our intention with AI is to empower creatives, not replace them, so we do not offer any generative AI tools or processes that replace humans.

There are many other AI options out there if this is wanted, of course, but we see AI as an assistant and a support to workflows to build efficiency and cost savings without compromising creative output.

QJ: At the moment, AI’s impact on broadcast production seems limited; it is perhaps most evident in subtitling and translation. However, technologies like depth sensors are getting closer to being integrated into cameras or used externally, making live VFX integration and intelligent autofocus easier and more efficient.

AI can automate camera movements, track subjects and adjust settings in real time, leading to smoother, more dynamic shots without constant manual input. In post-production, AI is helping to speed up tasks like colour grading, editing and VFX work. While it’s hard to say exactly how much of this is in full use today, these tools are definitely starting to make their mark – and it’s clear that they’re poised to play a larger role as the tech matures.

Studios like Warner Bros. or Sony prohibit the use of GenAI in VFX due to the copyright issues inherent in the generation process. While the technology exists, there is still a way to go before GenAI is widely adopted in linear VFX content production.

DEF: How do you foresee the convergence of traditional and virtual production impacting the creative process and technical challenges of live broadcasts?

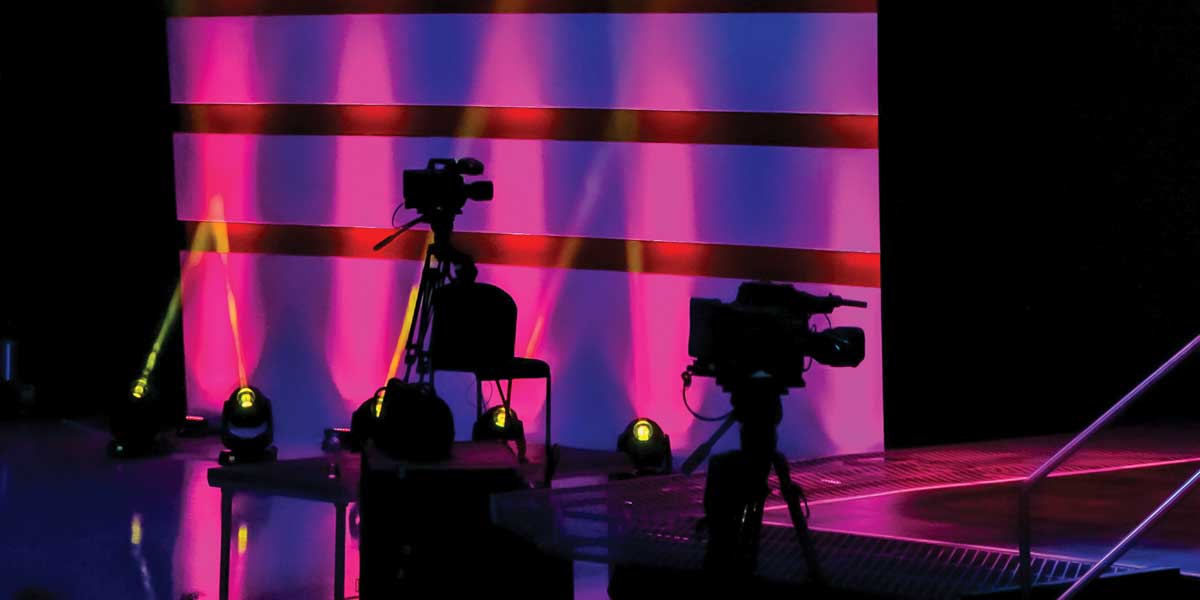

CH: We’ve been involved in a number of productions recently, specifically in sports broadcasting, where virtual elements have been integrated into live studios. A crucial technical challenge is latency between the virtual elements and the real production space; ensuring all production tools stay in sync and remain locked in place without error or drift is critical. Using a range of products to digitally glue the real studio and the virtual or rendered elements together lets customers create a consistent and convincing hybrid environment for the audience.

Likewise, we have encountered challenges with camera tracking systems in ensuring 100% accuracy and avoiding lag in the processing and delivery of blended virtual and live elements. This is critical, as the movement of hosts within the frame and the camera’s perspective must be convincing to the audience when virtual elements are added. Accurate tracking also gives presenters confidence when they interact with virtual elements.
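One common way to keep tracked virtual elements locked to the picture is to delay the camera-tracking data by the same number of frames the video spends in the processing chain, so both reach the compositor in step. The Python sketch below is a generic fixed frame delay; the class and field names are hypothetical, it assumes genlocked tracking with one sample per frame, and it does not describe any Blackmagic Design product.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Optional


@dataclass
class TrackingSample:
    """Hypothetical per-frame camera-tracking record."""
    frame: int
    pan_deg: float
    tilt_deg: float


class TrackingDelay:
    """Fixed frame delay that holds tracking data back to match video latency."""

    def __init__(self, delay_frames: int) -> None:
        if delay_frames < 0:
            raise ValueError("delay must be non-negative")
        self.delay_frames = delay_frames
        self._buffer: Deque[TrackingSample] = deque()

    def push(self, sample: TrackingSample) -> Optional[TrackingSample]:
        """Add this frame's sample; return the one from delay_frames ago (None while filling)."""
        self._buffer.append(sample)
        if len(self._buffer) > self.delay_frames:
            return self._buffer.popleft()
        return None


if __name__ == "__main__":
    # Assume (hypothetically) the video path adds 3 frames of latency.
    delay = TrackingDelay(delay_frames=3)
    for f in range(6):
        out = delay.push(TrackingSample(frame=f, pan_deg=f * 0.5, tilt_deg=0.0))
        print(f"output tick {f}: tracking from frame {out.frame if out else '-'}")
```

In practice the delay value is measured per installation, and a mismatch of even one frame shows up on air as virtual graphics sliding against the real set.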

Overall, the creative advantages outweigh the technical challenges, which are now well understood and tested. As a result, we are seeing more and more productions investigating these technologies to enhance the audience experience. Sport is a perfect testing ground, as it already uses a lot of on-screen graphics for game information and data. Building new and creative ways for pundits to interact with virtual and XR elements and present them to the audience has been creatively engaging.

DEF: What challenges and opportunities do you foresee with the integration of virtual production, XR and real-time rendering into broadcast settings?

QJ: Opportunities are expanding as VP and real-time rendering technologies become increasingly accessible and affordable. Each year, live rendering quality improves significantly without a major increase in performance costs. We’re also seeing ICVFX workflows becoming more refined, making them adaptable to a broader range of use cases.

This opens the door to more interactive and immersive environments, offering flexibility as well as the ability to make adjustments on the fly. That said, there are still some challenges. These systems remain expensive and complex, requiring careful integration from pre-production onward, and that level of planning isn’t always possible in live and broadcast settings.

As more tech layers are added, the risk of bugs, latency and performance issues increases. Plus, the steep learning curve for training on the various systems and pipelines can be a real hurdle for teams to overcome.

PC: ARRI’s Solutions team has developed an array of tools to address the challenges of ICVFX and workflow efficiency, focusing on colour accuracy and metadata handling for VP. Key innovations include: ARRI Color Management for Virtual Production, Live Link Metadata Plug-in for Unreal Engine and ARRI Digital Twins for previsualisation and techvis, which simulate their colour science accurately. Plus, SkyPanel X features different light engines.

One of the biggest challenges in VP is maintaining colour consistency when filming content displayed on LED walls. ARRI’s Color Management for Virtual Production addresses this by enabling precise LED wall calibration, minimising the post-production work needed to recover colour information. This maximises shooting efficiency and gives creative teams confidence in achieving high-quality images on set.
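The core idea of primary-matching calibration can be sketched with linear algebra. If the columns of a 3×3 matrix hold the measured XYZ of the wall’s red, green and blue primaries, and a second matrix holds the target primaries, then driving the wall with inverse(wall) × target × rgb reproduces the target colour. The Python sketch below assumes linear-light values and a single wall-wide measurement; real calibration also handles nonlinearity, per-panel variation and viewing angle, and this is not ARRI’s implementation.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def mat_mul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def mat_vec(m: Matrix, v: Vector) -> Vector:
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def inverse_3x3(m: Matrix) -> Matrix:
    """Invert a 3x3 matrix via the adjugate (transposed cofactors) over the determinant."""
    det = (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
           - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
           + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))
    if abs(det) < 1e-12:
        raise ValueError("primaries are not linearly independent")
    return [
        [(m[1][1] * m[2][2] - m[1][2] * m[2][1]) / det,
         (m[0][2] * m[2][1] - m[0][1] * m[2][2]) / det,
         (m[0][1] * m[1][2] - m[0][2] * m[1][1]) / det],
        [(m[1][2] * m[2][0] - m[1][0] * m[2][2]) / det,
         (m[0][0] * m[2][2] - m[0][2] * m[2][0]) / det,
         (m[0][2] * m[1][0] - m[0][0] * m[1][2]) / det],
        [(m[1][0] * m[2][1] - m[1][1] * m[2][0]) / det,
         (m[0][1] * m[2][0] - m[0][0] * m[2][1]) / det,
         (m[0][0] * m[1][1] - m[0][1] * m[1][0]) / det],
    ]


def correction_matrix(wall_primaries: Matrix, target_primaries: Matrix) -> Matrix:
    """Matrix C such that wall_primaries @ (C @ rgb) == target_primaries @ rgb."""
    return mat_mul(inverse_3x3(wall_primaries), target_primaries)
```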

The ARRI Digital Twin allows VP set-ups to be previsualised and fine-tuned before physical production. By linking real-world and virtual lighting systems, it guarantees seamless synchronisation and reduces both pre- and post-production costs.

ARRI’s Live Link Metadata Plug-in for Unreal Engine further enhances production efficiency by streaming real-time camera and lens metadata directly into Unreal Engine, enabling dynamic, real-time adjustments to virtual environments. This tool reduces manual data entry and enables smoother collaboration between on-set teams and post-production.
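As a rough illustration of why streamed lens metadata matters: a virtual camera’s field of view has to follow the real lens’s focal length frame by frame. Under a simple pinhole model, horizontal field of view is 2·atan(sensor width / (2 × focal length)). The Python sketch below uses that model; the record type and its fields are hypothetical and are not the Live Link plug-in’s actual data format, the 28mm sensor width is an assumed example value, and a real pipeline would also account for lens distortion and focus breathing.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class LensFrame:
    """Hypothetical per-frame lens metadata record (illustrative field names)."""
    frame: int
    focal_length_mm: float


def horizontal_fov_deg(focal_length_mm: float, sensor_width_mm: float) -> float:
    """Pinhole-model horizontal field of view, in degrees."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


def virtual_fov_track(frames: List[LensFrame], sensor_width_mm: float) -> List[Tuple[int, float]]:
    """Map each metadata sample to the field of view a virtual camera would need."""
    return [(f.frame, horizontal_fov_deg(f.focal_length_mm, sensor_width_mm)) for f in frames]


if __name__ == "__main__":
    # A zoom from 25 mm to 75 mm on an assumed 28 mm-wide sensor area.
    zoom = [LensFrame(i, 25.0 + i * 10.0) for i in range(6)]
    for frame, fov in virtual_fov_track(zoom, sensor_width_mm=28.0):
        print(frame, round(fov, 1))
```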

DEF: What are the biggest innovations in display technologies that are influencing the future of broadcast environments?

PC: ARRI developed REVEAL to significantly enhance image quality, capture colours more accurately and closer to human visual perception, and maintain the same colour tones for SDR and HDR, while at the same time significantly increasing workflow efficiency.

ARRI’s solution divides the creative render transform from the display render transform, acknowledging that today’s displays cannot fully capture the range of colours and detail our cameras can. This flexibility allows us to future-proof our systems, ensuring that as display technology improves, the captured images will retain their artistic integrity.

Our cameras also support simultaneous SDR and HDR output, enabling broadcasters to cater to various display capabilities without compromising image quality.

QJ: One of the most exciting innovations right now is GhostFrame technology, which lets each camera capture different content from the same LED wall, opening up possibilities in broadcast. On the LED panel front, RGB cyan and RGB cyan-amber configurations are being tested to improve colour rendering, both for in-camera displays and as lighting sources, delivering a more accurate visual experience.
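GhostFrame itself is proprietary, but the general principle behind showing different content to different cameras is time-division multiplexing: the wall cycles through several feeds within each camera frame period, and each camera’s shutter is phased to open only while its own feed is on screen. The Python sketch below models that timing under simplifying assumptions (equal slots, ideal genlock, no LED refresh or PWM effects); the names are hypothetical and it is not how GhostFrame is implemented.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Slot:
    feed: str          # which content is on the wall during this slot
    start_ms: float    # offset from the start of the camera frame period
    duration_ms: float


def build_schedule(feeds: List[str], camera_fps: float) -> List[Slot]:
    """Split one camera frame period into equal slots, one per feed."""
    period_ms = 1000.0 / camera_fps
    slot_ms = period_ms / len(feeds)
    return [Slot(feed, i * slot_ms, slot_ms) for i, feed in enumerate(feeds)]


def wall_switch_rate_hz(num_feeds: int, camera_fps: float) -> float:
    """How often the wall must change content to show every feed once per camera frame."""
    return num_feeds * camera_fps


def max_shutter_angle_deg(num_feeds: int) -> float:
    """Largest shutter angle that keeps each camera's exposure inside its own slot."""
    return 360.0 / num_feeds


if __name__ == "__main__":
    for slot in build_schedule(["camera A", "camera B", "camera C"], camera_fps=50.0):
        print(f"{slot.feed}: opens at {slot.start_ms:.2f} ms for {slot.duration_ms:.2f} ms")
```

The trade-off is visible in the last function: every extra feed shortens the exposure window each camera can use.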

We’re also seeing rapid progress in deployable LED displays. Smaller manufacturers are developing flexible solutions such as LED ‘rugs’ and curtains that are quick to set up and remarkably durable. While they’re not yet up to broadcast standard, their potential for on-location work will be hard to ignore once they are ready.

DEF: What trends do you see driving the evolution of workflows in broadcast production?

QJ: Cloud-based workflows have become a game changer, offering greater flexibility and efficiency in broadcast production. VP solutions are also transforming the landscape, allowing for more dynamic and immersive content creation. AI is automating a lot of the production pipeline – from camera operations to post-editing – making processes much faster and more efficient.

Another trend that’s here to stay is the use of smartphones, particularly iPhones, by journalists for live broadcasting on location. It’s a simple and portable solution that continues to be a reliable tool in the field.

PC: The shift towards video over IP and SMPTE ST 2110 is revolutionising broadcast workflows. SMPTE ST 2110 is resolution, colour-space and frame-rate agnostic, allowing for greater flexibility in multicam environments. Once video is transferred over IP, it can be seamlessly shared across decentralised locations to improve workflow efficiencies, significantly reducing the complexity of traditional broadcast infrastructures.
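To make the format-agnostic point concrete: an ST 2110-20 video stream announces its picture format in SDP, so the same transport can carry HD SDR or UHD HLG simply by changing parameters. The Python sketch below builds such a format line; the parameter names follow our reading of ST 2110-20 and its published examples, the helper name is hypothetical, and both should be checked against the standard before use.

```python
from fractions import Fraction


def st2110_20_fmtp(payload_type: int, width: int, height: int, frame_rate: Fraction,
                   depth: int, sampling: str = "YCbCr-4:2:2",
                   colorimetry: str = "BT709", tcs: str = "SDR") -> str:
    """Build an SDP a=fmtp line describing an ST 2110-20 video stream."""
    # exactframerate is written as an integer or as a ratio such as 60000/1001.
    if frame_rate.denominator == 1:
        rate = str(frame_rate.numerator)
    else:
        rate = f"{frame_rate.numerator}/{frame_rate.denominator}"
    params = [
        f"sampling={sampling}",
        f"width={width}",
        f"height={height}",
        f"exactframerate={rate}",
        f"depth={depth}",
        f"TCS={tcs}",                  # transfer characteristic, e.g. SDR, PQ, HLG
        f"colorimetry={colorimetry}",  # e.g. BT709, BT2020, BT2100
        "PM=2110GPM",                  # general packing mode
        "SSN=ST2110-20:2017",          # version of the standard the stream follows
    ]
    return f"a=fmtp:{payload_type} " + "; ".join(params) + ";"


if __name__ == "__main__":
    # Same transport, two very different formats: only the parameters change.
    print(st2110_20_fmtp(96, 1920, 1080, Fraction(60000, 1001), 10))
    print(st2110_20_fmtp(96, 3840, 2160, Fraction(50), 10, colorimetry="BT2100", tcs="HLG"))
```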

DEF: What future trends or technologies do you believe will have the biggest impact on the quality and capabilities of broadcast production?

QJ: The integration of depth sensors and camera tracking directly into broadcast cameras could be a game changer, making real-time compositing and tracking far more seamless and efficient. AI-driven live compositing and relighting also hold huge potential, allowing for dynamic adjustments on the fly, enhancing both the quality and efficiency of broadcast production. These technologies will push the boundaries of what’s possible in live environments, improving visual quality while streamlining workflows.
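The appeal of in-camera depth sensing is easy to see in the simplest form of depth compositing: if every live pixel has a depth value, live and CG pixels can be merged by keeping whichever is nearer the camera, so a presenter can walk in front of or behind a virtual object without a hand-drawn matte. The Python sketch below assumes a depth map already registered to the camera image and in the same units as the renderer’s depth buffer; the names are hypothetical, and it makes a hard per-pixel choice with no edge softening.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # RGB
Image = List[List[Pixel]]
Depth = List[List[float]]           # distance from camera, same units for both sources


def depth_composite(live: Image, live_depth: Depth, cg: Image, cg_depth: Depth) -> Image:
    """Per pixel, keep whichever source is nearer to the camera.

    Ties go to the live camera image.
    """
    return [
        [l_px if l_d <= c_d else c_px
         for l_px, l_d, c_px, c_d in zip(l_row, ld_row, c_row, cd_row)]
        for l_row, ld_row, c_row, cd_row in zip(live, live_depth, cg, cg_depth)
    ]
```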

PC: Camera tech is already surpassing the capabilities of current display technologies. As display technologies evolve, we will see improvements in the ability to fully realise the creative vision captured. The gap between what is captured in-camera and what is displayed will narrow, allowing viewers to see the full image, especially its colour fidelity, specular highlights and dynamic range. This shift will enhance the quality of broadcast production and audience engagement.

This round table was first published in the November 2024 issue of Definition.